LIGHT AND SOUND

I tackled my first assignment: make a plant sing. I figured that setting up a serial connection with p5 and using one of its sound-related libraries could work!

I aimed for the sound of a theremin because, as an instrument, it operates on multiple axes and encourages dimensional interaction. Using p5.Oscillator, I set up a system where the signal received from the capacitive input determines a specific sound and pitch. I also realised I don't know a lot about musical terminology, so I hope I'm not mixing up terms.
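The sensor-to-pitch step can be sketched roughly as below. This is a minimal sketch under stated assumptions, not the code used in the project: it assumes the microcontroller prints one raw capacitive reading per line over serial, and the ranges `SENSOR_MIN`/`SENSOR_MAX` (0 to 1023) and `FREQ_MIN`/`FREQ_MAX` (100 to 1000 Hz) are hypothetical placeholders. `mapRange` mirrors p5's `map()` with clamping added; `parseReading` and `lineToFrequency` are illustrative helper names.

```typescript
// Hypothetical sensor range: assumes the microcontroller prints one
// raw capacitive reading (0-1023) per line over serial.
const SENSOR_MIN = 0;
const SENSOR_MAX = 1023;

// Hypothetical pitch range for the theremin-like voice, in Hz.
const FREQ_MIN = 100;
const FREQ_MAX = 1000;

// Linear re-mapping, like p5's map(value, a1, a2, b1, b2), but clamped
// so a stray reading cannot push the pitch outside the chosen range.
function mapRange(
  value: number,
  inMin: number,
  inMax: number,
  outMin: number,
  outMax: number,
): number {
  const t = (value - inMin) / (inMax - inMin);
  const clamped = Math.min(1, Math.max(0, t));
  return outMin + clamped * (outMax - outMin);
}

// Parse one line of serial text into a reading; returns null for
// empty or garbled lines so the caller can simply skip them.
function parseReading(line: string): number | null {
  const value = Number.parseInt(line.trim(), 10);
  return Number.isNaN(value) ? null : value;
}

// Turn one serial line into an oscillator frequency, or null if unusable.
function lineToFrequency(line: string): number | null {
  const reading = parseReading(line);
  if (reading === null) return null;
  return mapRange(reading, SENSOR_MIN, SENSOR_MAX, FREQ_MIN, FREQ_MAX);
}
```

In a p5 sketch, each non-null result would then be passed to a started `p5.Oscillator` with `osc.freq(frequency)`, while garbled lines are skipped. Clamping keeps a sudden spike in the readings from throwing the pitch far outside the intended range.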

Here's an ungrounded version of the plant theremin: it's quite noisy and seems to pick up signals from everywhere, not just the plant.

However, I found that if I charge my laptop while it runs, it somehow grounds the entire system and the resistor-based sensor picks up touches much more reliably! Interestingly, the pitch always returns to exactly the same frequency (254) on touch.

I also came across an installation titled ‘Plant Machete’. The artist, David Bowen, equips a philodendron with a machete and lets it defend itself, with the slashes and stabs of the weapon dictated by signals read from the plant. It's a fascinating combination of mechanical and natural systems, and it conveys the truly ‘living’ nature of a plant well.

I particularly like how human-like behaviour is given to an entity that would usually be considered the ‘victim’ of a machete.

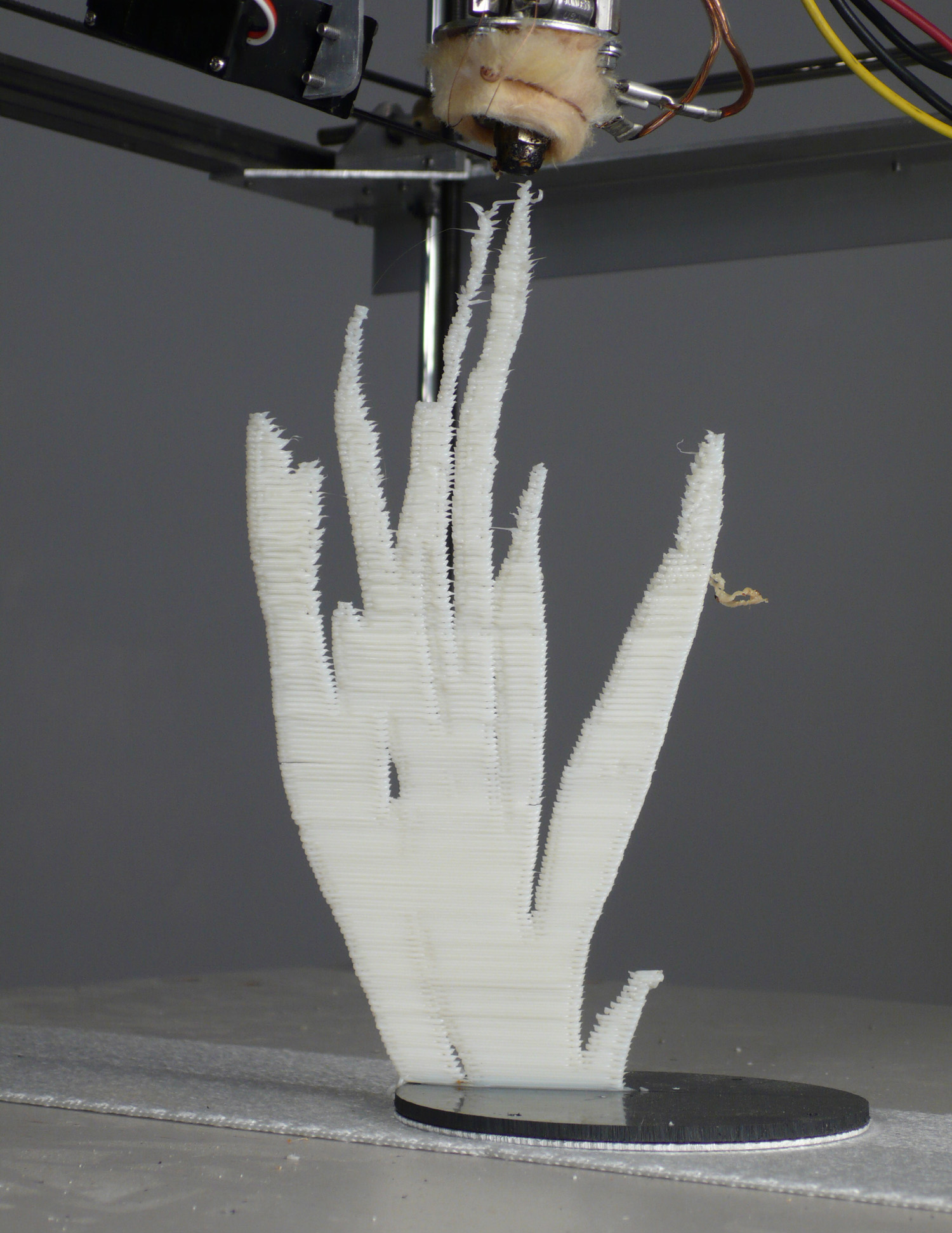

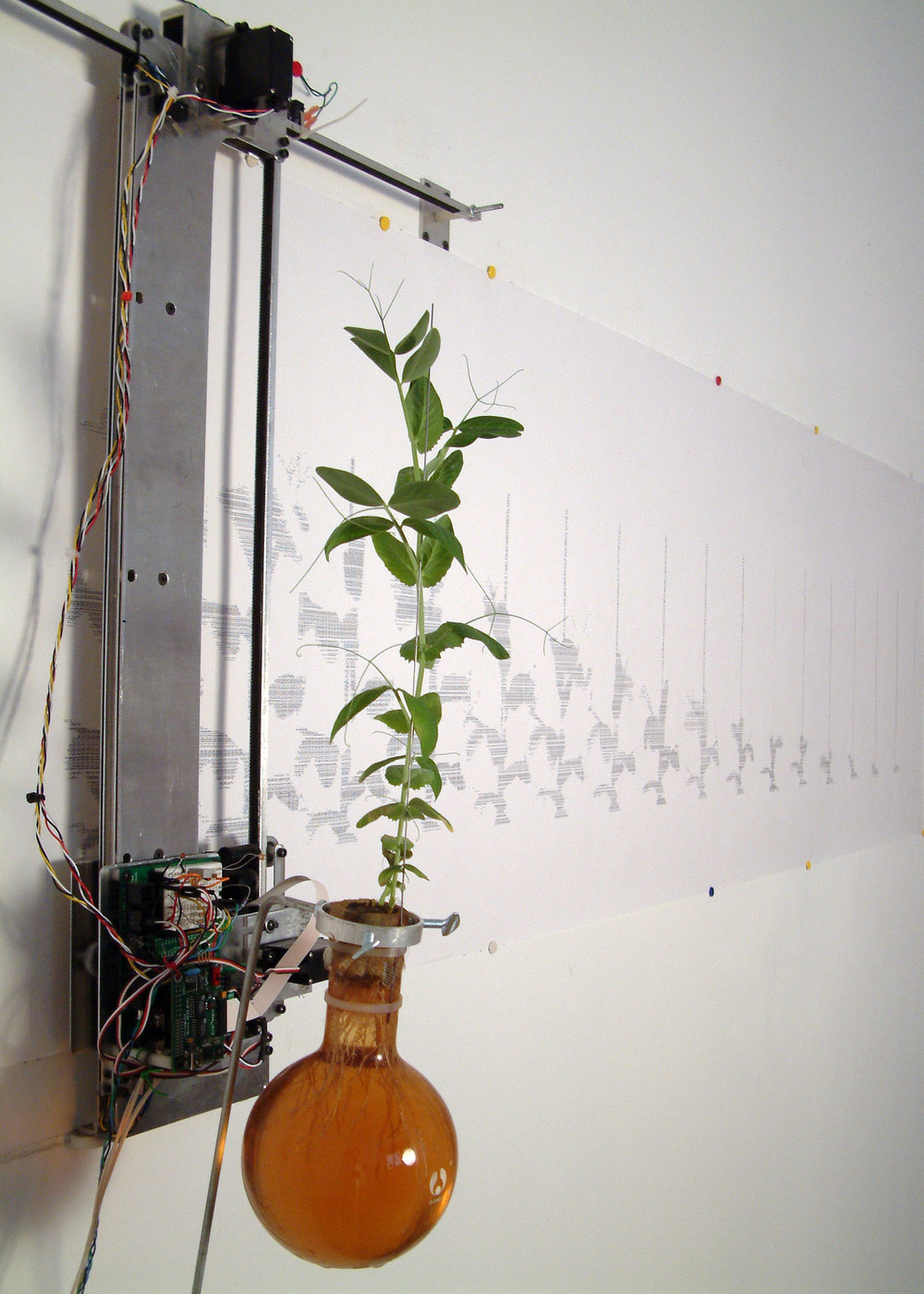

David Bowen also has a couple of other really interesting projects that study, track and document plant growth. My favourite is one where he uses 3D printing and machine fabrication to reconstruct the form of the plant at each stage of its growth.

I wondered how I could apply these more systematic, scientific forms of study to objects while still expressing them through a creative outlet, as Bowen does. To track growth, I have been taking static pictures of my plant collection, but what if I 3D-scanned them instead? Would nuances be easier to spot?

My 3D scans are not perfect, but if I scan consistently over a period of time, I should be able to trace specific growth in far greater detail than a simple photo allows.

I also found a website called ‘Plants in Motion’ by Roger P. Hangarter, which is a repository of plant timelapses! Even though it may not seem like it, plants move of their own accord in response to external stimuli. These movements simply happen over such a long period of time that impatient people like us can easily miss them.

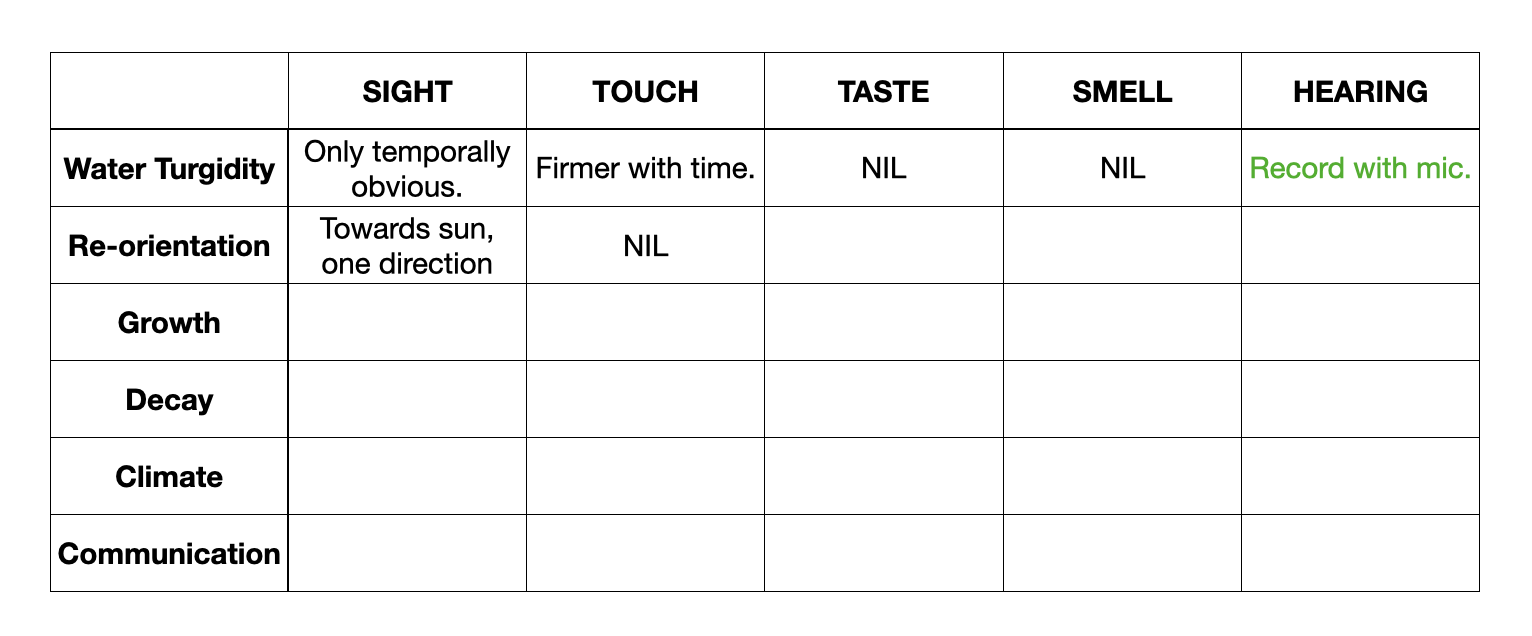

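I set up a table that maps the five human senses to a plant's tropic and nastic movements. Tropic movements depend on the direction of a stimulus (a shoot bending towards light), whereas nastic movements do not (a flower opening as temperature changes). Over time, and especially over the December holidays, I hope to fill the table with detailed observations.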

Projection Mapping

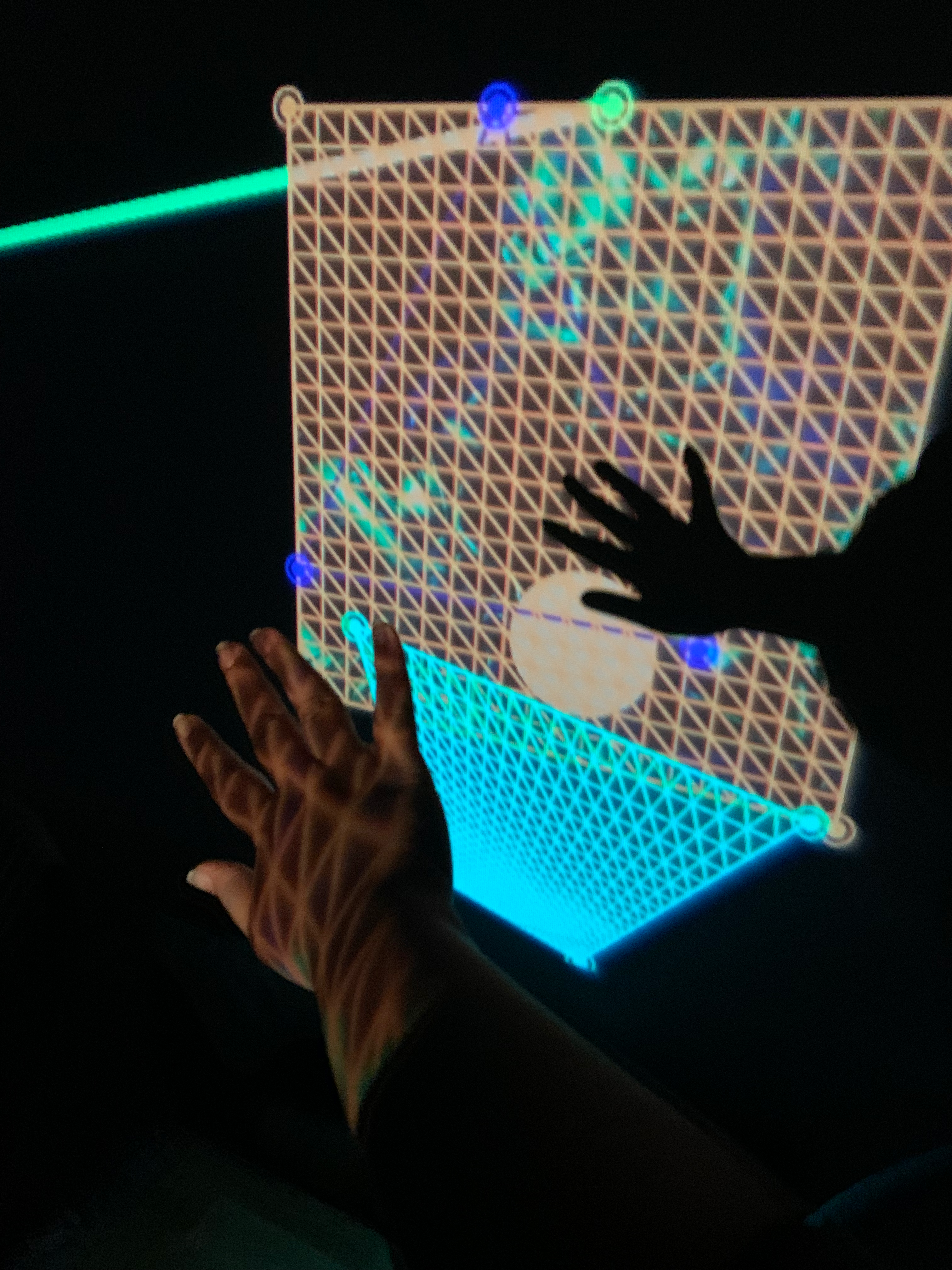

For the light experiment, I wanted to explore how projection mapping could play a role in actuating plant signals and inputs. Existing Living Media Interface projects use screens to their advantage, but I want to explore how superimposing visuals onto the form of the plant itself can add value to the experience, by targeting specific areas of a leaf or allowing for more interconnected self-expression.

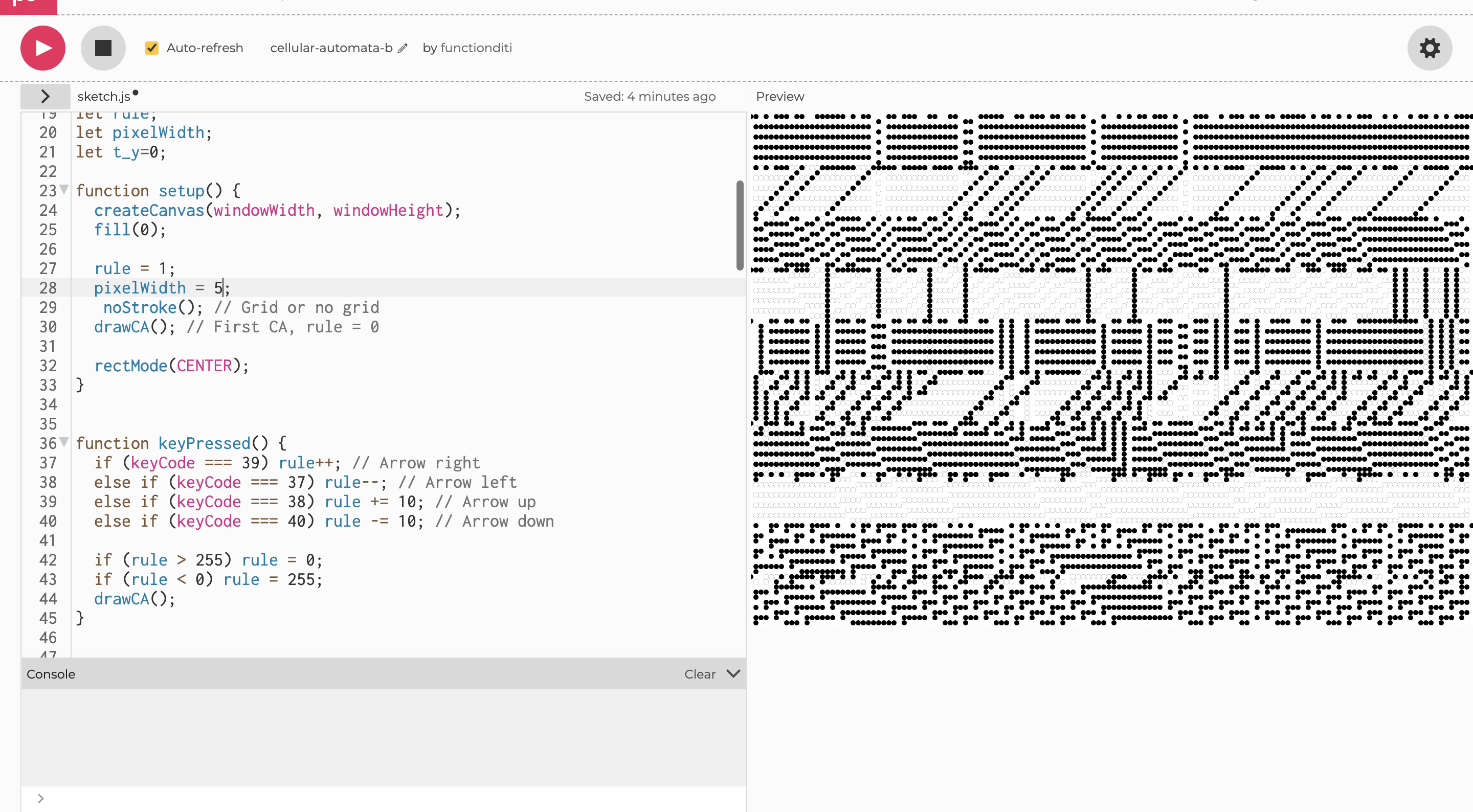

I began with cellular automata (CA) as a starting visual because their rules mirror systematic processes that already exist in nature, and they can model some of the organic patterns found on shells, leaves and other naturally occurring materials. CA are also very mathematically driven, so combining them with numerical plant data should be doable.
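The post doesn't say which CA was projected, so the sketch below assumes a one-dimensional elementary CA of the Wolfram kind (rule numbers 0 to 255), a family often linked to shell patterns: Rule 30, for instance, resembles the markings on Conus textile shells. It is a minimal illustration of the update rule, with `step` and `run` as hypothetical names: each cell looks at itself and its two neighbours, reads those three bits as a number from 0 to 7, and takes that bit of the rule number as its next state.

```typescript
// One generation of a 1D elementary cellular automaton.
// The 3-cell neighbourhood (left, centre, right) forms a number 0-7,
// which selects one bit of `rule` as the cell's next state.
function step(cells: number[], rule: number): number[] {
  const n = cells.length;
  return cells.map((_, i) => {
    // Wrap around at the edges so the row behaves like a ring.
    const left = cells[(i - 1 + n) % n];
    const centre = cells[i];
    const right = cells[(i + 1) % n];
    const index = (left << 2) | (centre << 1) | right;
    return (rule >> index) & 1;
  });
}

// Run several generations, starting from a single live cell in the middle.
// Returns every row, oldest first, ready to be drawn top to bottom.
function run(width: number, generations: number, rule: number): number[][] {
  let row = new Array<number>(width).fill(0);
  row[Math.floor(width / 2)] = 1;
  const history: number[][] = [row];
  for (let g = 1; g < generations; g++) {
    row = step(row, rule);
    history.push(row);
  }
  return history;
}
```

To draw it in p5, each returned row could be rendered as a line of squares with `rect()`. Because the rule is just an integer, a plant reading could in principle be mapped onto the rule or onto the starting row; that pairing is a hypothetical idea rather than something tested in this project.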

I got my hands on a projector and projected the CA pattern onto a wall to understand scale.

Now that I had a basic sketch set up, I wanted to projection-map onto a leaf surface so I could begin to curate and fine-tune my p5 sketches. I remember that back in Year 1 Computation in Design, we did exactly the same thing: projected CA onto geometric shapes. Let's try organic ones now!

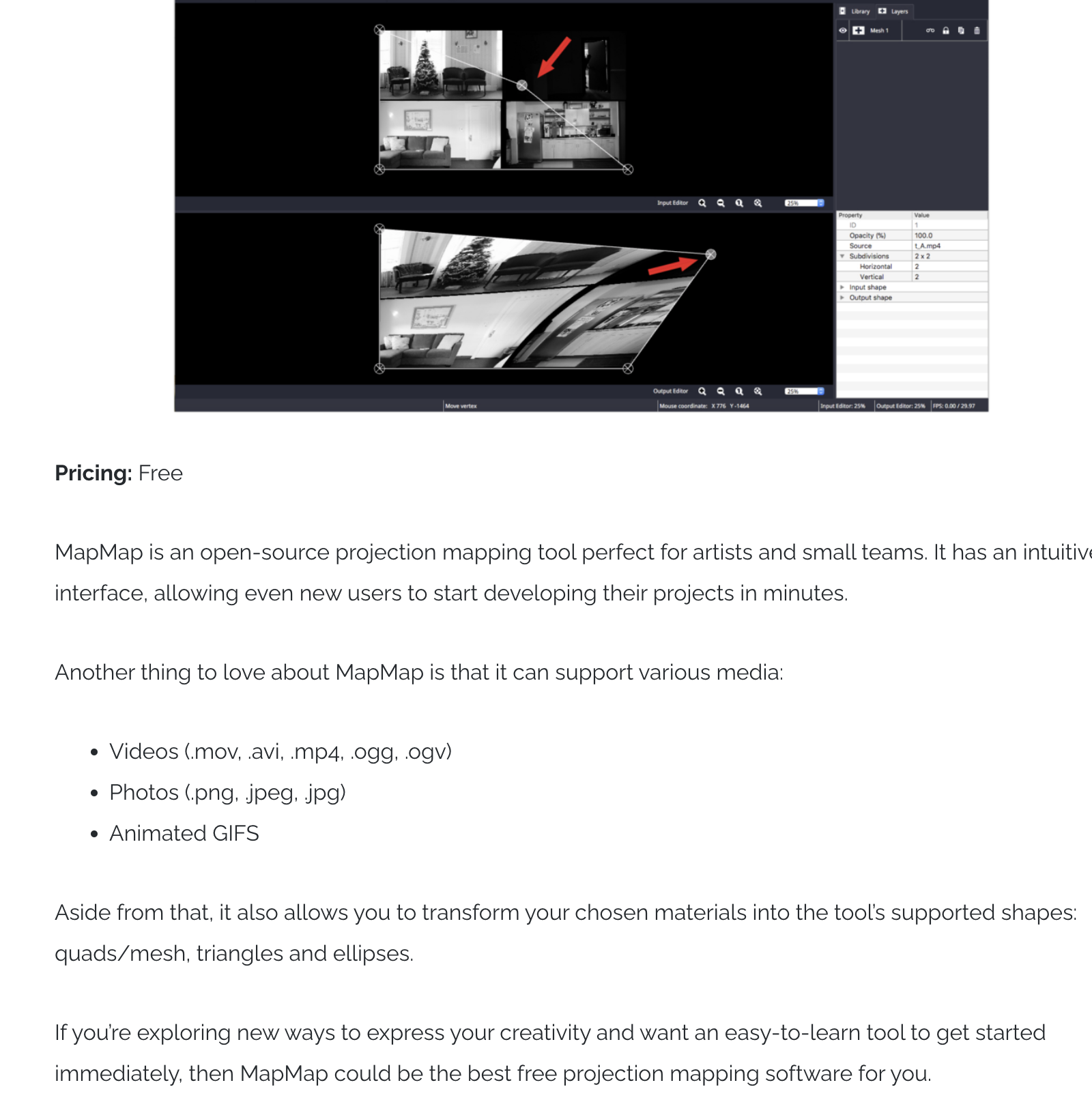

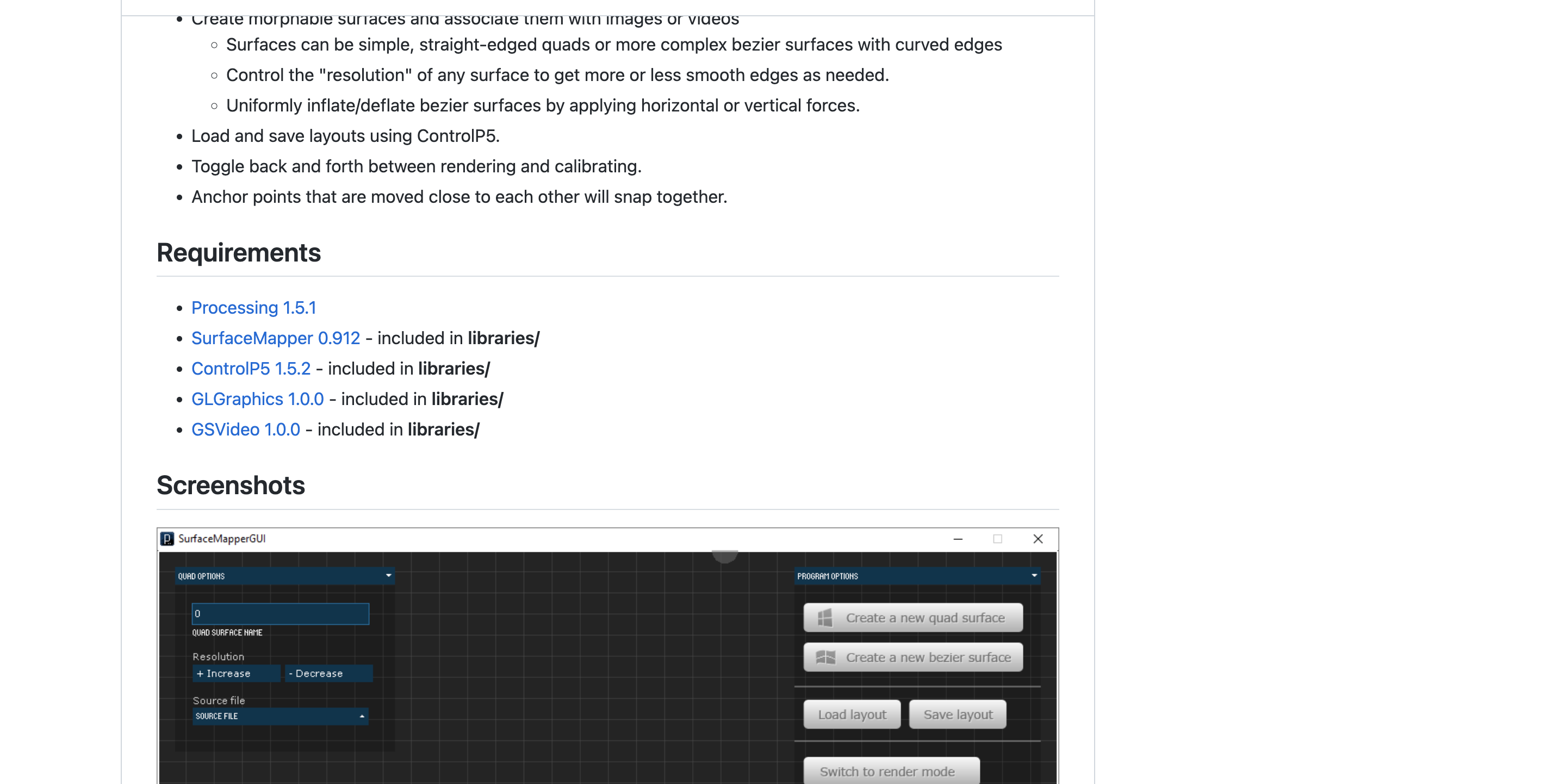

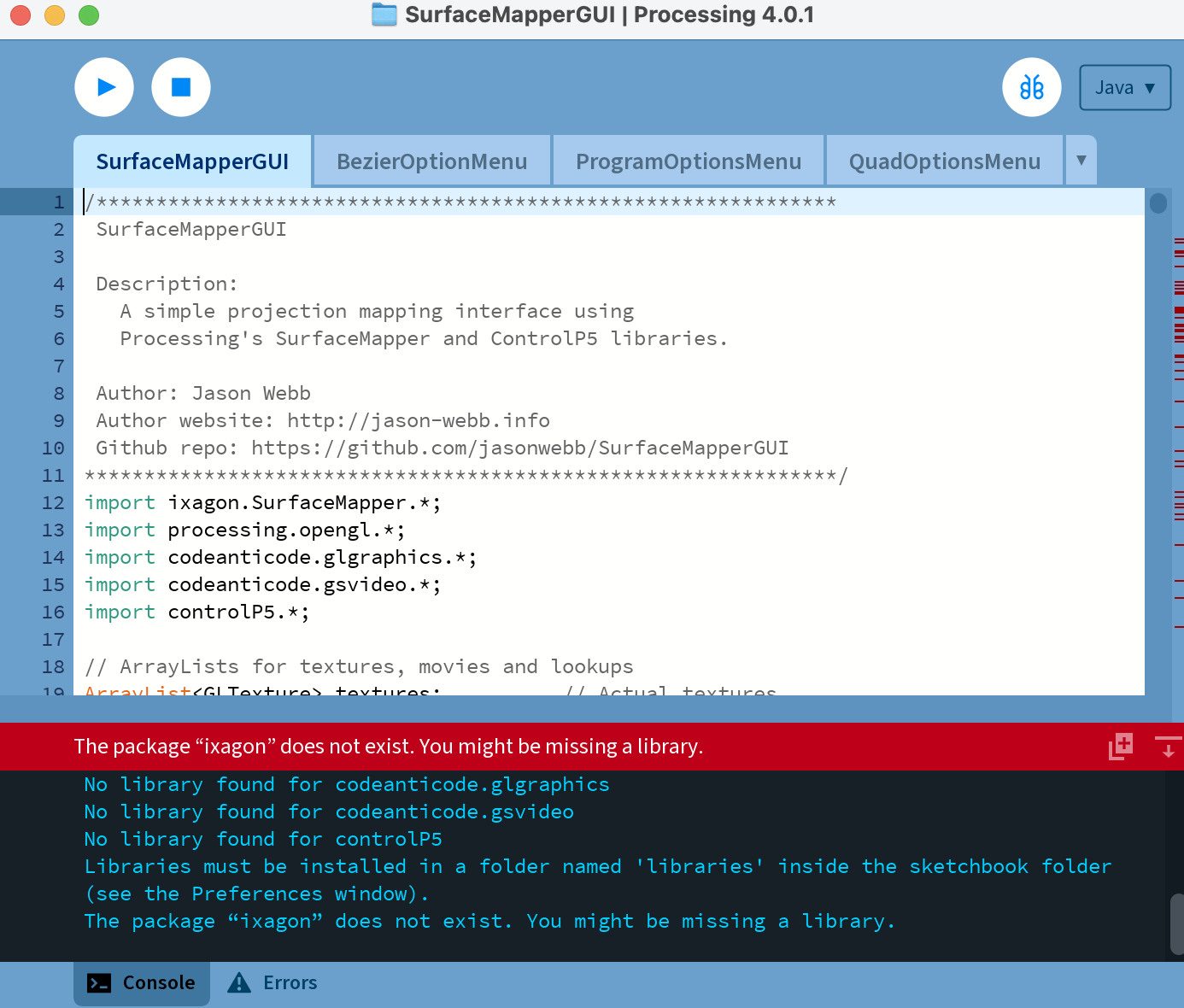

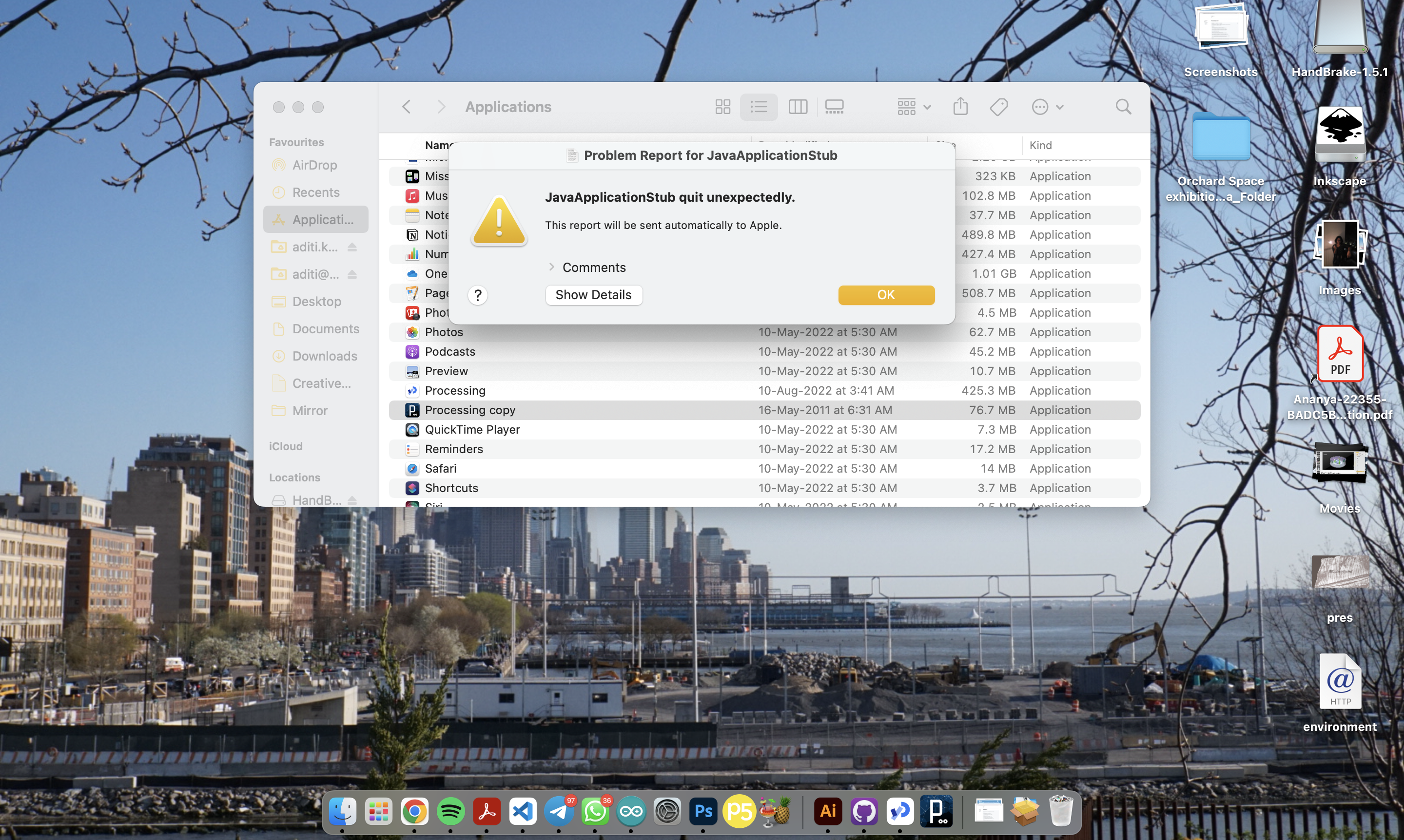

I looked into a couple of software tools that support projection mapping. While mapmap was outdated on my OS, I did find a GitHub library called Surface Map that I tried to install, and, guess what, it depends on a library written by Andreas. Hello!

Too bad: my laptop refused to run Processing 1.5, so I gave up on that avenue.

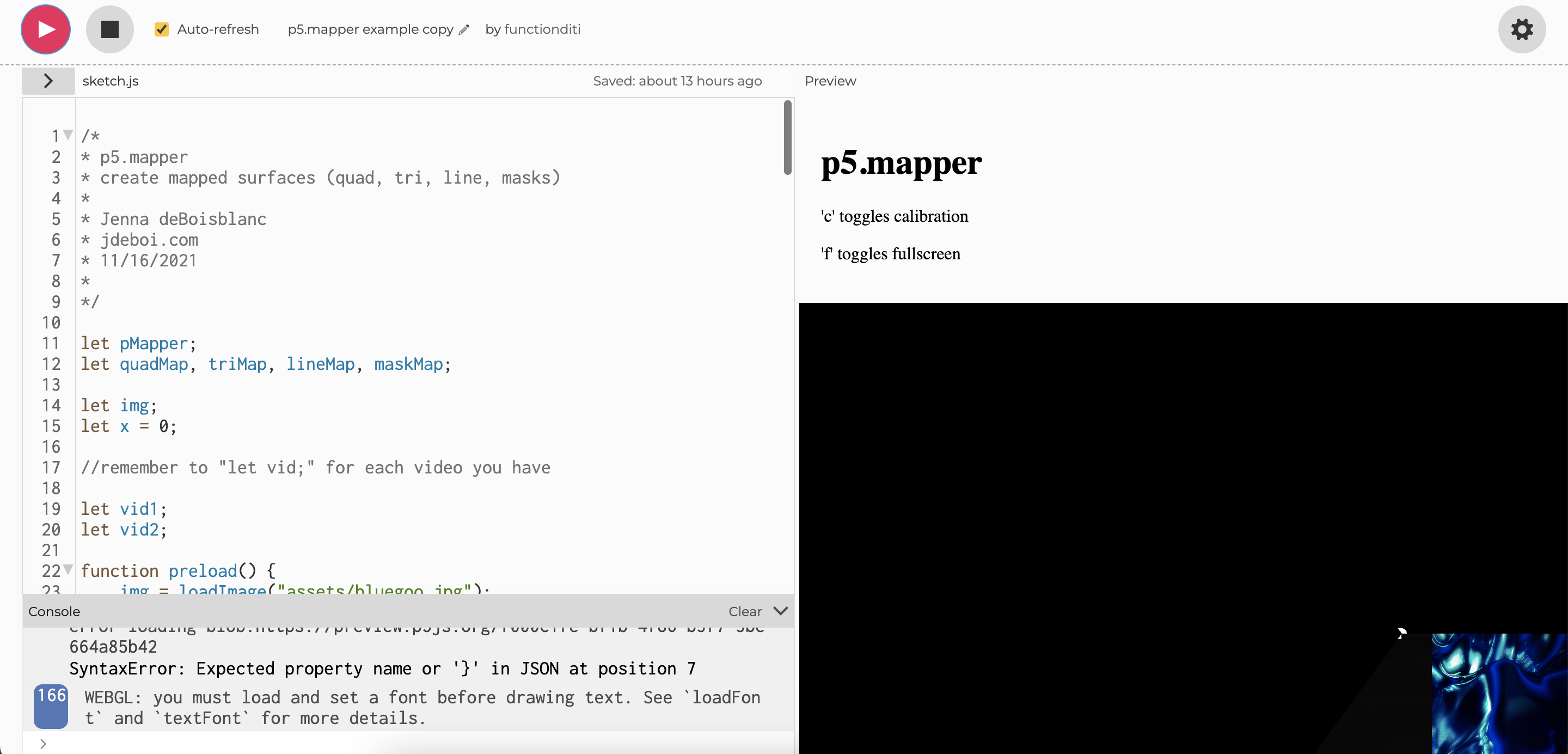

Instead, I found some p5.js projection-mapping code adapted by Jo and decided to use that. However, it only supports geometric shapes, so I would have to give up some fidelity when projecting onto a leaf.
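Shape-based projection mapping usually comes down to dragging a shape's corners and warping the content to fit them. How Jo's adapted code does this isn't described here, so the following is an assumed, generic illustration rather than its implementation: a bilinear corner-pin that sends a point (u, v) in the unit square of the sketch to the matching point inside a four-cornered surface. The names `Point`, `Quad` and `mapToQuad` are hypothetical.

```typescript
interface Point {
  x: number;
  y: number;
}

// Four corners of the projected surface in projector pixels, listed
// clockwise from the top-left (hypothetical, e.g. dragged into place by hand).
type Quad = [Point, Point, Point, Point];

// Bilinear corner-pin: (u, v) in [0, 1] x [0, 1] is a position in the
// original sketch; the result is where that point lands on the quad.
// Note: this is not perspective-correct; that would need a homography.
function mapToQuad(u: number, v: number, quad: Quad): Point {
  const [tl, tr, br, bl] = quad;
  // Interpolate along the top and bottom edges first...
  const top = { x: tl.x + (tr.x - tl.x) * u, y: tl.y + (tr.y - tl.y) * u };
  const bottom = { x: bl.x + (br.x - bl.x) * u, y: bl.y + (br.y - bl.y) * u };
  // ...then blend between those two points vertically.
  return {
    x: top.x + (bottom.x - top.x) * v,
    y: top.y + (bottom.y - top.y) * v,
  };
}
```

Passing every cell corner of a CA grid through `mapToQuad` would stretch the pattern over the quad. Any outline with more curves than four corners can describe, such as a leaf edge, still has to be approximated, which is where the loss of fidelity comes from.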

Reflections

In the sound setup, interactions with the plant were successful when the system was grounded (it was too noisy when ungrounded), because a distinct audible change could be heard whenever the plant was touched. This opens up avenues for further plant-driven musical experimentation; a wider variety of sounds, and more selectivity in the narrative those sounds tell, could be considered.

On the other hand, the projection-mapped cellular automata system does not work as intended: the light comes from a single direction, so it casts shadows whenever the participant's hands get in the way. It also only works in the dark, so it is unusable for most of the day! Still, it made for a fascinating midnight experience.

Light and sound both provide opportunities related to ubiquitous computing, and both have a place in an interactive system where multiple sensors control multiple actuators! The variety of possible manifestations allows a firmer narrative to be created.