FINAL PROTOTYPE

Okay, so given that we have about a week left and barely anything to show for a prototype, doing something wearable is a definite no-go. If I still feel this is a path worth going down, I can revisit it in semester 2 with more time, more preparation and more knowledge. Maybe I can consult people in fashion who can help me draft cloth patterns better, and also figure out how to shield my wires from the human body’s natural conductivity.

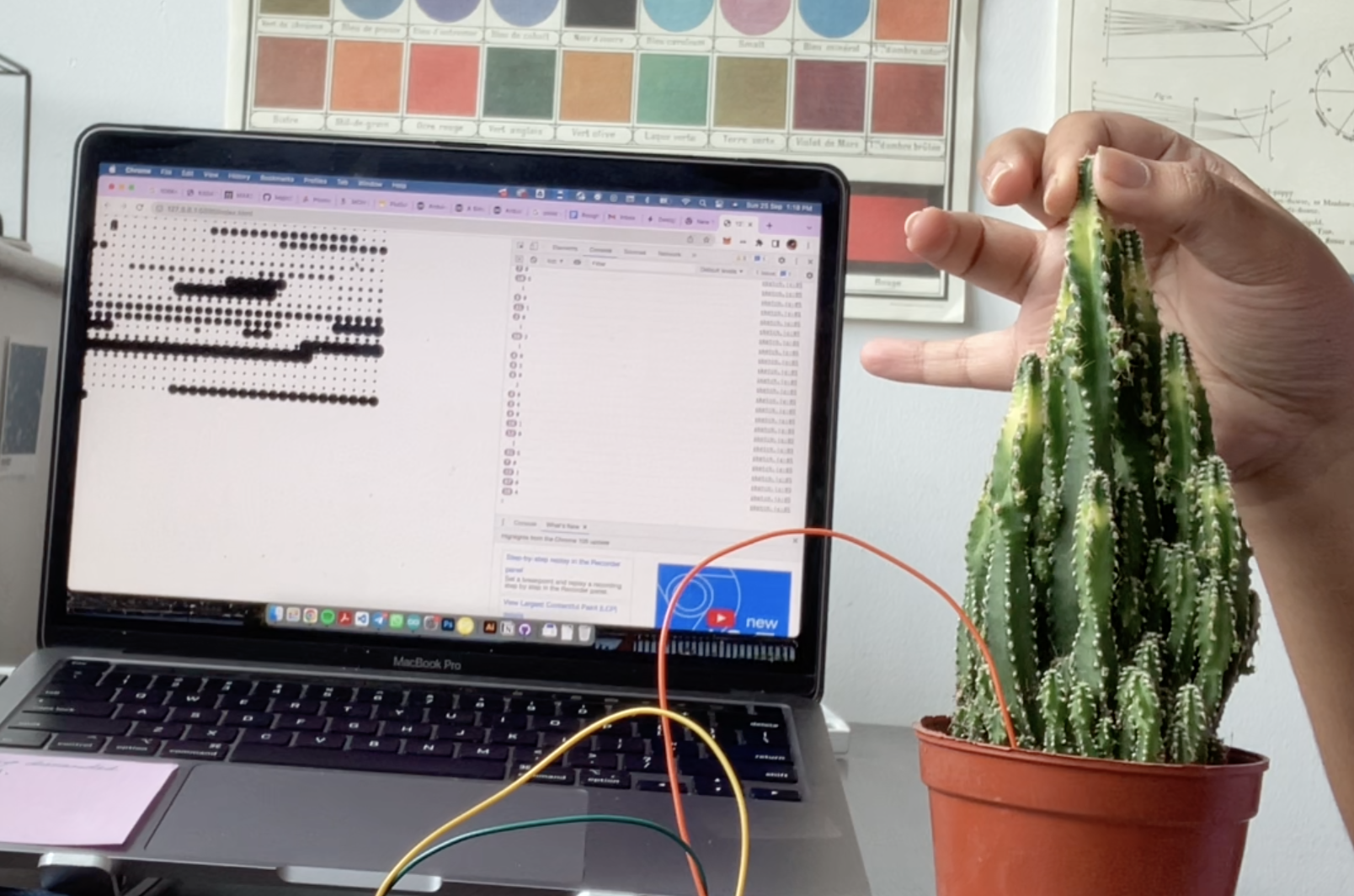

Revisiting my initial explorations with Living Media Interfaces, where I started to visually represent the plant signals I was receiving, I realised there was a lot of potential there. I can achieve really interesting results even with the janky capacitive sensor I have.

Revisiting past ideas.

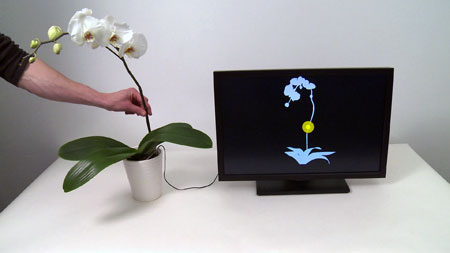

The only problem at the moment is: what value can I add to the pool of existing Living Media Interfaces? Botanicus Interacticus and Interactive Plant Growing both use screens.

I don’t know what more I can say with, for example, a p5 connection. It’s cool, for sure, but maybe I can see what else I have at my disposal.

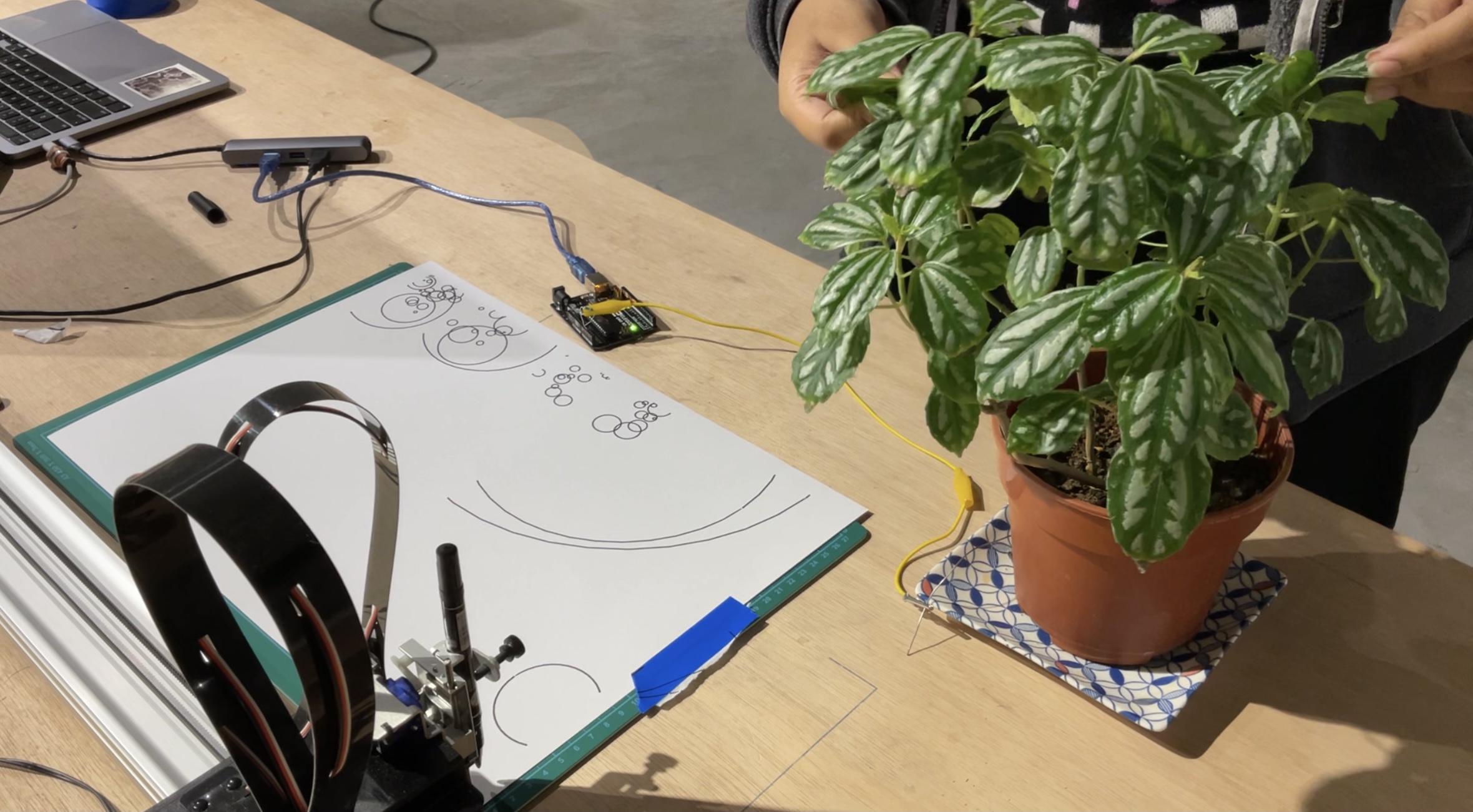

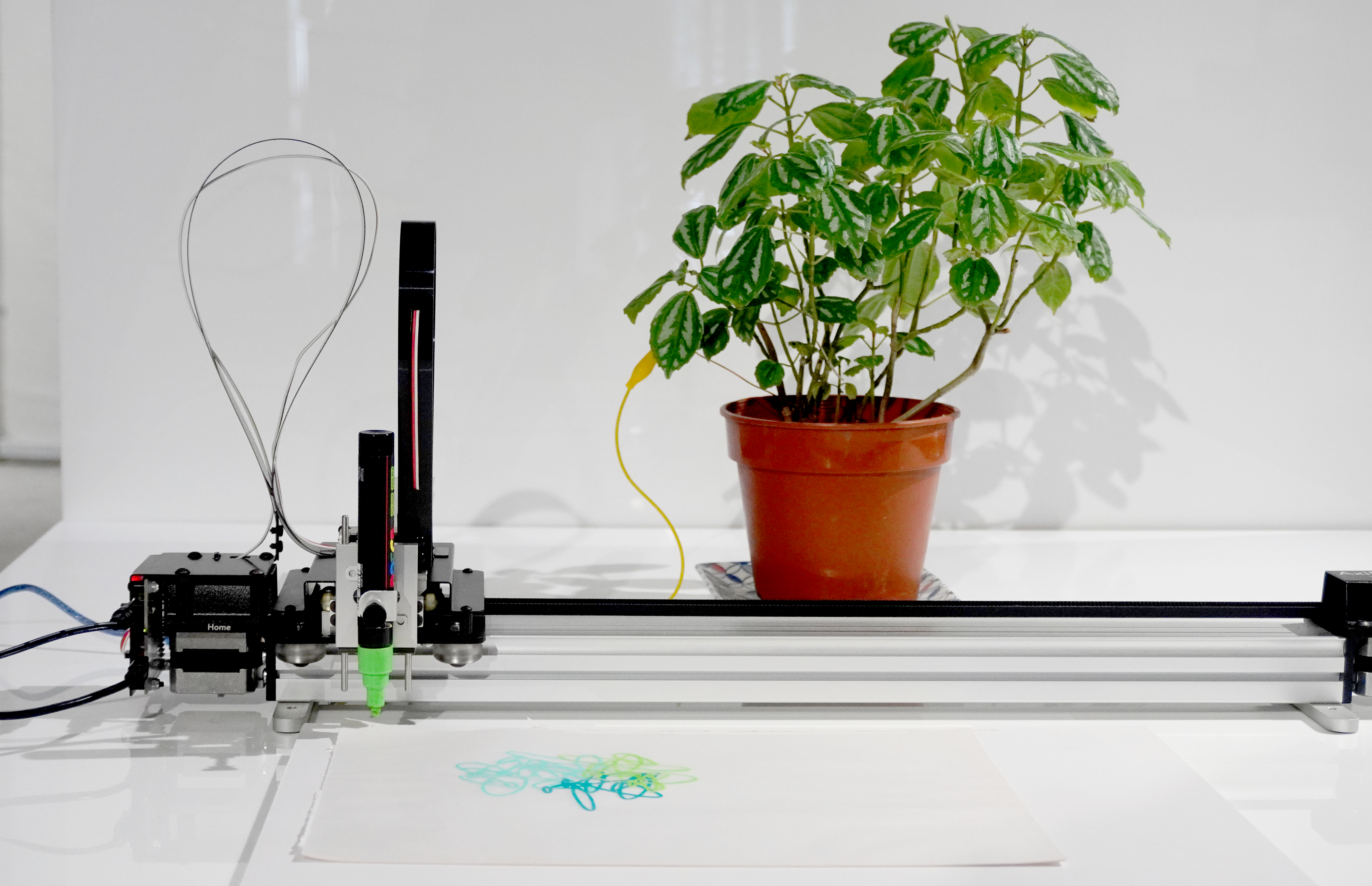

The Axidraw!

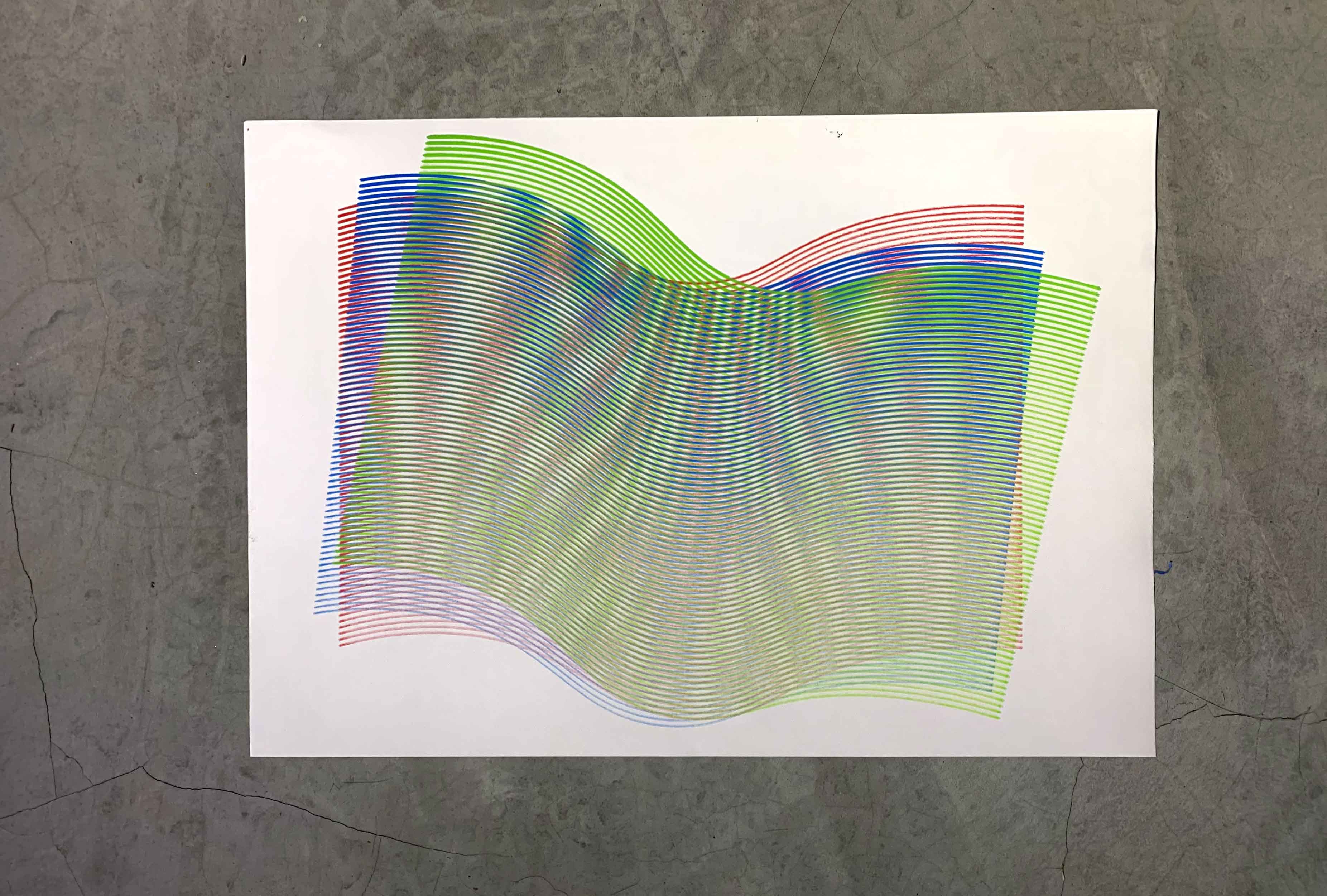

Maybe the Axidraw, my best best friend. I’ve been using it a lot in preparation for the Orchard Cineleisure exhibition ‘make, shift’. Until now, I’ve been uploading .svg files to it and watching it draw out my moiré patterns, but the coolest part is the unpredictability. The table is crooked, the pen runs out, the paper shifts ever so slightly: these ‘problems’ actually end up creating pieces with unique textures, compositions and value.

In David Bowen’s ‘Plant Machete’, something I like is the use of physical mechanisms to create a narrative: human-like qualities such as defensiveness, but also violence, are applied to the plant. In the same manner, I wondered if an Axidraw could help set up conversations between plant and human. We have handwriting, don’t we? What if the plant could communicate through written media too?

I’ve been struggling to understand what Human Plant Interaction can really mean for both the human and the plant, but using the Axidraw as an actuating device can help solve that. It lets the human spend some time within the interaction and walk away with a physical token of it. Additionally, caring for a plant (pruning, plucking out dead leaves, watering) already involves many tangible interactions. Could the act of taking care of a plant be the focus? An activity log or certificate could be the outcome.

If I can differentiate between different gestures on the human’s part, then I can solidify the narrative of tactile care and attention to detail.

Some questions to keep in mind when I design this prototype and especially next semester:

The human: what’s in it for them? What value do they extract? How do they come away from this experience with a changed mindset? What discourse am I encouraging?

The plant: what does the plant actually feel? Do plants actually feel, and if they do, how can I extract that? A stress-free plant shows visible signs of health: chlorophyll-rich leaves, less drooping (depending on the species, of course) and, in general, promising signs of growth.

Just like humans are drawn towards intimate, tactile connections, do plants feel the same way?

Figuring out Plant to Axidraw workflow

I figured that a serial connection, set up the same way as Arduino -> p5 (the Arduino writes to the serial port and p5 reads from it), would be the best way to start with the Axidraw. The only problem: I was only familiar with uploading .svg files and making the Axidraw draw them all in one go, but that’s no fun. A major focus is real-time interactivity.

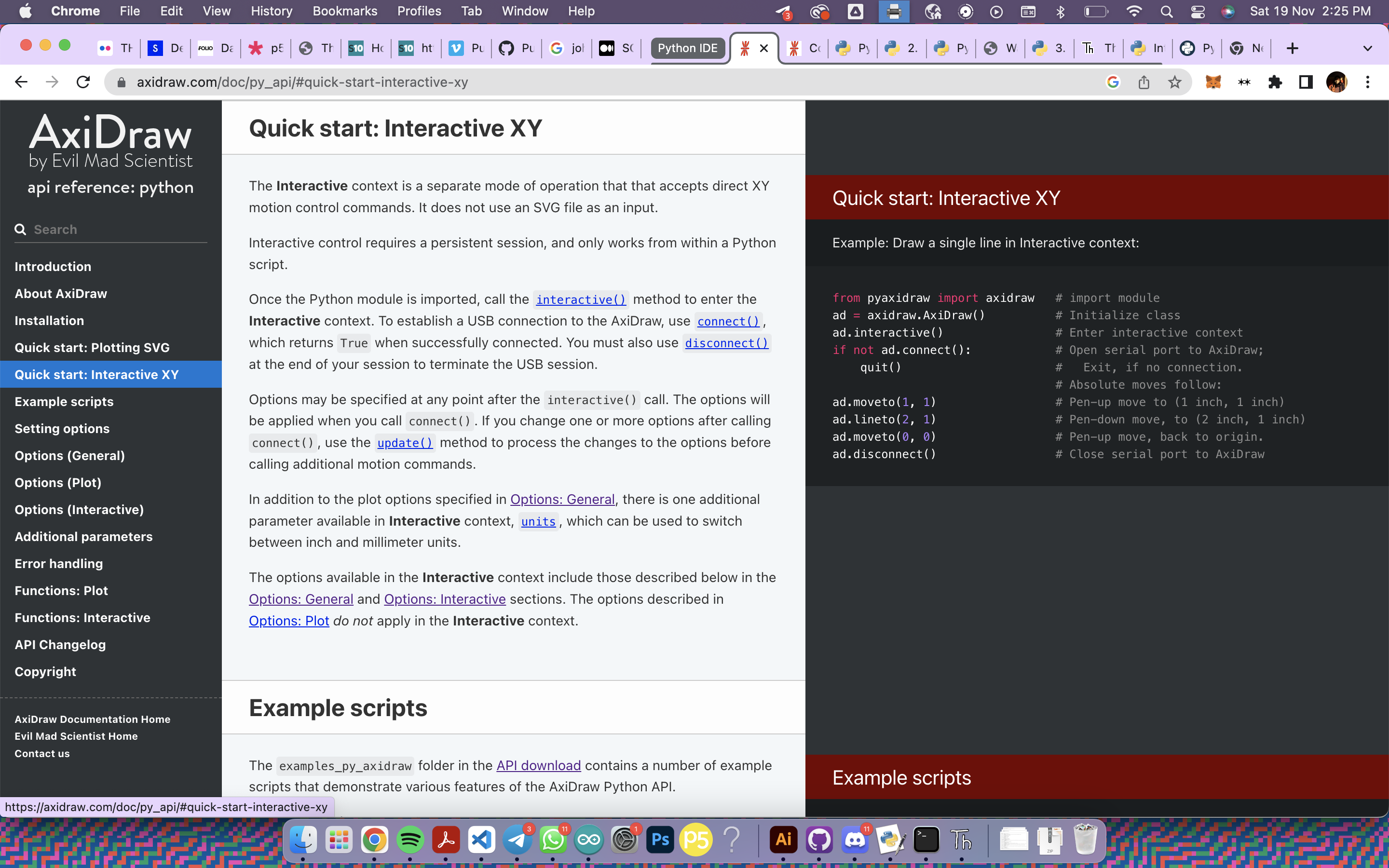

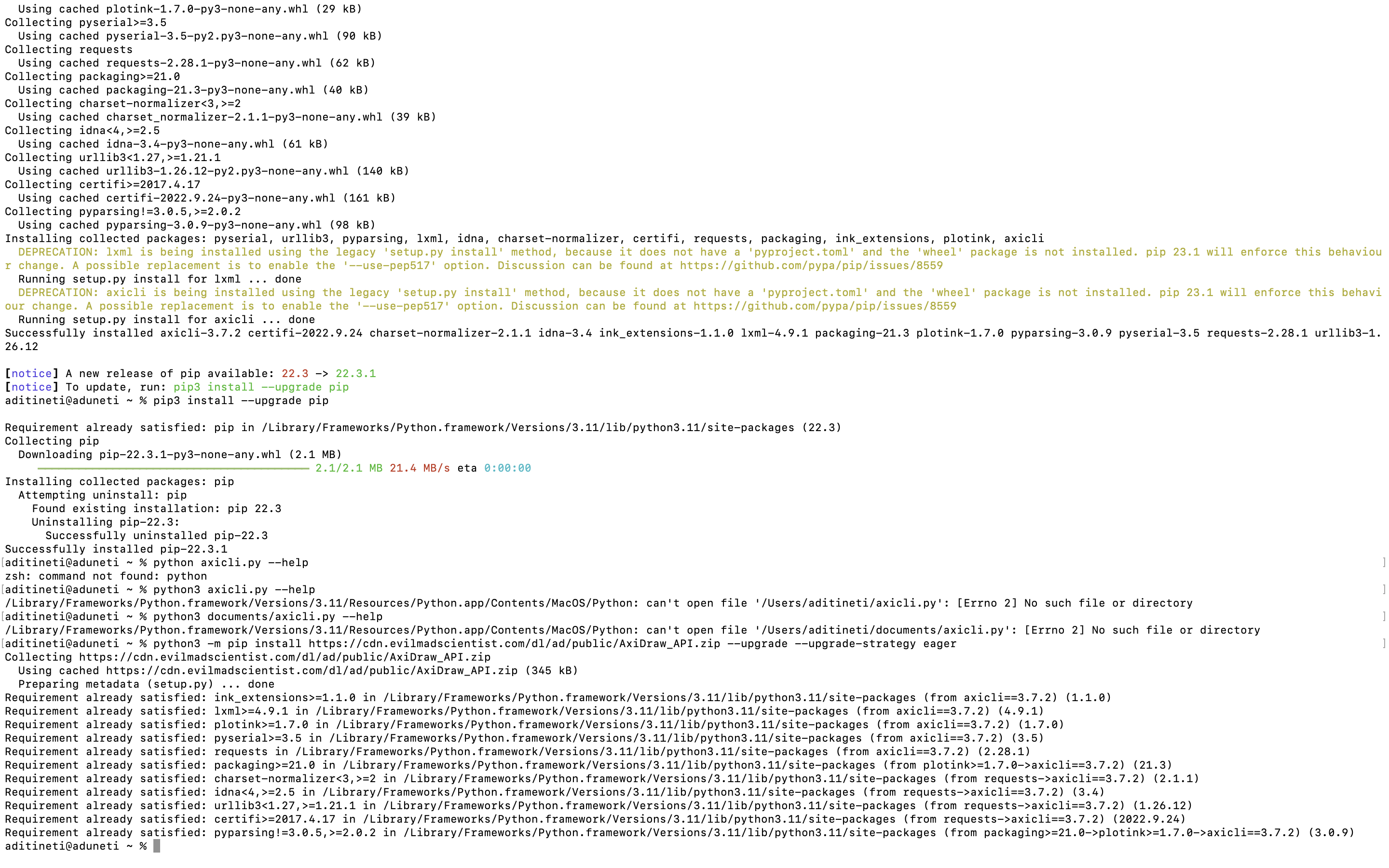

I realised that the Axidraw has a Python API with an interactive XY mode. The documentation was amazing and had clear-cut instructions for installing all the necessary software on my laptop.
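Here’s a minimal sketch of how the interactive mode can be driven, assuming the `pyaxidraw` package from the Axidraw Python API is installed. `interactive()`, `connect()`, `options.units`, `update()`, `moveto()`, `lineto()` and `penup()` come from that API; the helper names `connect_axidraw` and `draw_polyline` are my own, hypothetical ones. The drawing helper takes the plotter object as a parameter, so it can be checked without the machine attached.

```python
def connect_axidraw():
    """Open an AxiDraw in interactive (XY) mode.

    Needs the pyaxidraw package and a plugged-in AxiDraw, so it is
    only called when the real plotter is available.
    """
    from pyaxidraw import axidraw  # imported here so draw_polyline can be tried without hardware

    ad = axidraw.AxiDraw()
    ad.interactive()          # send XY commands one at a time instead of plotting a whole SVG
    if not ad.connect():      # connect() returns False if no AxiDraw is found
        raise RuntimeError("No AxiDraw found on USB")
    ad.options.units = 1      # work in centimetres
    ad.update()               # apply option changes made after connect()
    return ad


def draw_polyline(ad, points):
    """Trace a connected path through (x, y) points, then lift the pen.

    `ad` is anything with moveto/lineto/penup, e.g. a connected AxiDraw.
    """
    if not points:
        return
    x0, y0 = points[0]
    ad.moveto(x0, y0)         # pen-up travel to the first point
    for x, y in points[1:]:
        ad.lineto(x, y)       # pen-down line to each following point
    ad.penup()
```

With the plotter connected, `ad = connect_axidraw()` followed by `draw_polyline(ad, [(1, 1), (2, 1), (2, 2)])` would draw two short strokes; `ad.moveto(0, 0)` and `ad.disconnect()` then return the carriage to where it started and close the connection.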

It was a bit of a process, because I also wasn’t all that familiar with the terminal. To be really honest, I was kind of intimidated by it because its user interface is the worst, and I always used to get random errors that I’d end up fixing through other, more user-friendly methods. But I’m just hating: it’s a command line interface, after all, and once I got familiar with it, I realised how much faster I can complete some tasks.

The biggest hurdle for me was Python. Unlike JavaScript and C++, which I was semi-familiar with before starting design school, I was tackling Python unprepared. I had no other option, though, because the Axidraw API was designed for Python.

I think the thing I find most annoying about Python is the stupid indentation rules. What is wrong with using brackets to group code together? They’re not that hard to keep track of, either. I kept getting indentation and syntax errors. But, I will admit, the syntax eliminates a lot of the unnecessary clutter that comes with other languages.

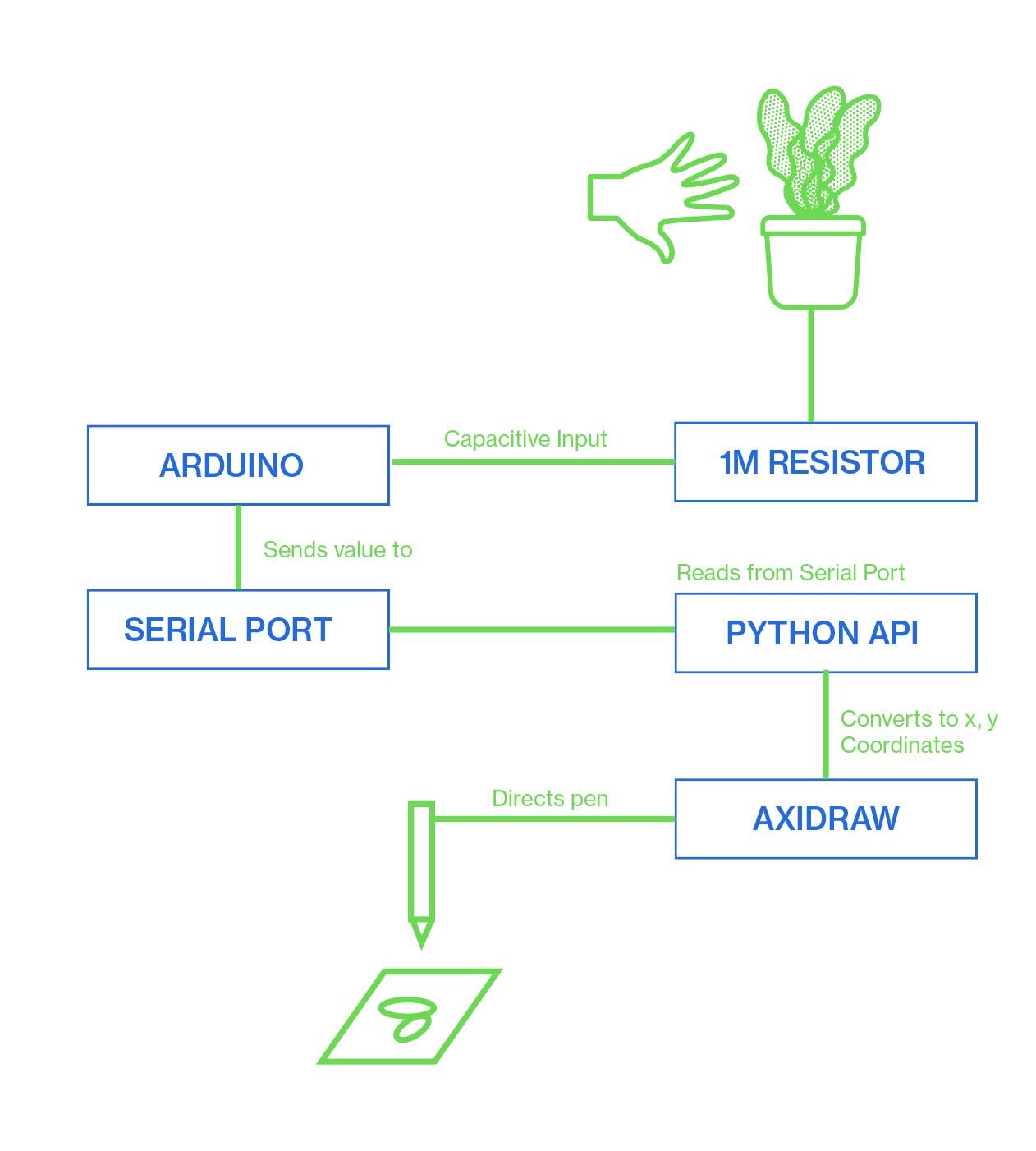

Okay, so the Axidraw API uses Python, and the capacitive sensor code for my plant was written in C++ for Arduino. How can I connect the two? I found pySerial, a Python library that lets code read from the serial port. Perfect! That means I can write one Python script that reads the capacitive input and uses it to drive the Axidraw’s X, Y movements.
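A minimal sketch of the reading side, assuming the Arduino prints one number per line (for example with `Serial.println(total)`) at 9600 baud; both are assumptions, so the baud rate has to match `Serial.begin()` in the actual sketch. `serial.Serial(port, baudrate, timeout=...)` and `readline()` are pySerial calls; `parse_reading`, `read_reading` and `open_sensor` are hypothetical helper names.

```python
def parse_reading(raw):
    """Turn one serial line (bytes) into an int, or None if it is empty or garbled.

    Assumes the Arduino prints one number per line, e.g. Serial.println(total).
    """
    text = raw.decode("ascii", errors="ignore").strip()  # drop the trailing "\r\n"
    try:
        return int(text)
    except ValueError:
        return None  # e.g. a half-received line right after the port opens


def read_reading(ser):
    """Read one line from an open serial port and parse it."""
    raw = ser.readline()  # b"" (or a partial line) if the timeout runs out first
    return parse_reading(raw) if raw else None


def open_sensor(port, baud=9600):
    """Open the Arduino's serial port with pySerial.

    `baud` must match Serial.begin() in the Arduino sketch.
    """
    import serial  # pySerial; imported here so parsing can be tested without it
    return serial.Serial(port, baud, timeout=1)
```

The port name depends on the machine: on a Mac it usually looks like `/dev/tty.usbmodem...` and on Windows like `COM3`, so something like `ser = open_sensor("/dev/tty.usbmodem1101")` (a made-up name) followed by `read_reading(ser)` in a loop. Returning `None` for bad lines, rather than crashing, keeps the loop alive through the odd garbled line.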

So, basically, this was my workflow:

I spent some time over the weekend at Orchard Cineleisure setting this up, and it took some trial and error, but I finally got it to work!! I am proud of myself 🙂

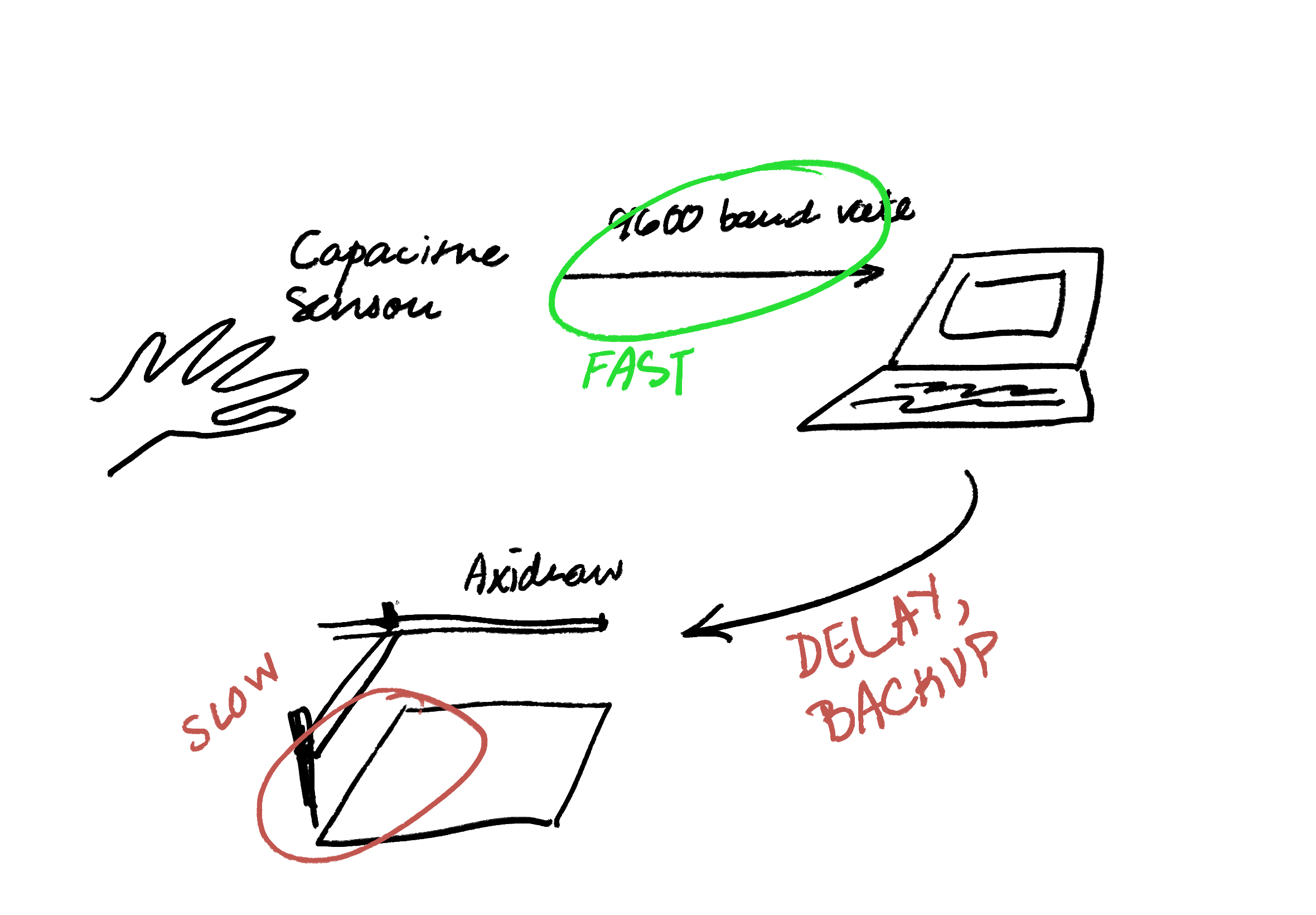

Something I failed to consider before actually connecting the entire system was that, with the Axidraw, real-time interaction wasn’t actually possible.

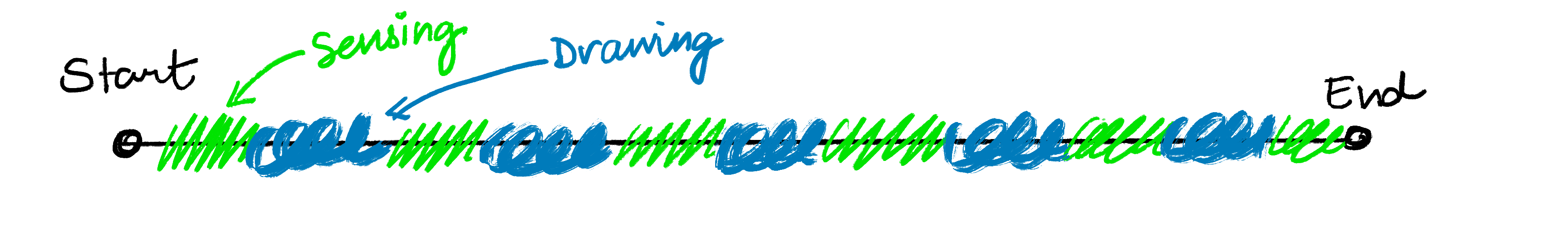

Because the Axidraw took at least 3 seconds to draw something, readings would arrive faster than it could draw them and pile up in a kind of traffic jam, so the interaction wasn’t real time anymore. To fix this, I tried something like this:

The sensor takes ten readings, then pauses to give the Axidraw time to draw the result of those readings.
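The same batching idea can also be sketched on the Python side. This is an assumption about how it might look, not exactly what I ran: `read` and `draw` are passed in as plain functions (hypothetical names) so the loop can be tested without the plotter, and the optional `flush` step could be pySerial’s `reset_input_buffer()`, which discards readings that queued up while the Axidraw was busy drawing.

```python
def sense_then_draw(read, draw, batch_size=10, cycles=1, flush=None):
    """Alternate between collecting a batch of readings and drawing from it.

    read()        -> int or None: one sensor reading (None = timeout or garbled line)
    draw(values)  : plots something from a finished batch; blocks while the plotter moves
    flush()       : optional; discards readings that queued up during drawing
    """
    for _ in range(cycles):
        batch = []
        while len(batch) < batch_size:
            value = read()
            if value is not None:      # skip empty or garbled lines
                batch.append(value)
        draw(batch)                    # sensing is effectively paused while this runs
        if flush is not None:
            flush()                    # e.g. ser.reset_input_buffer() with pySerial
```

With the serial helpers from earlier, a call might look like `sense_then_draw(lambda: read_reading(ser), my_draw, cycles=100, flush=ser.reset_input_buffer)`, where `my_draw` is whatever turns a batch into a shape. Flushing after each drawing matches what testers noticed later: touches made while the Axidraw is drawing aren’t counted.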

For now, this is as real-time as I can get it to be.

The Visual Language

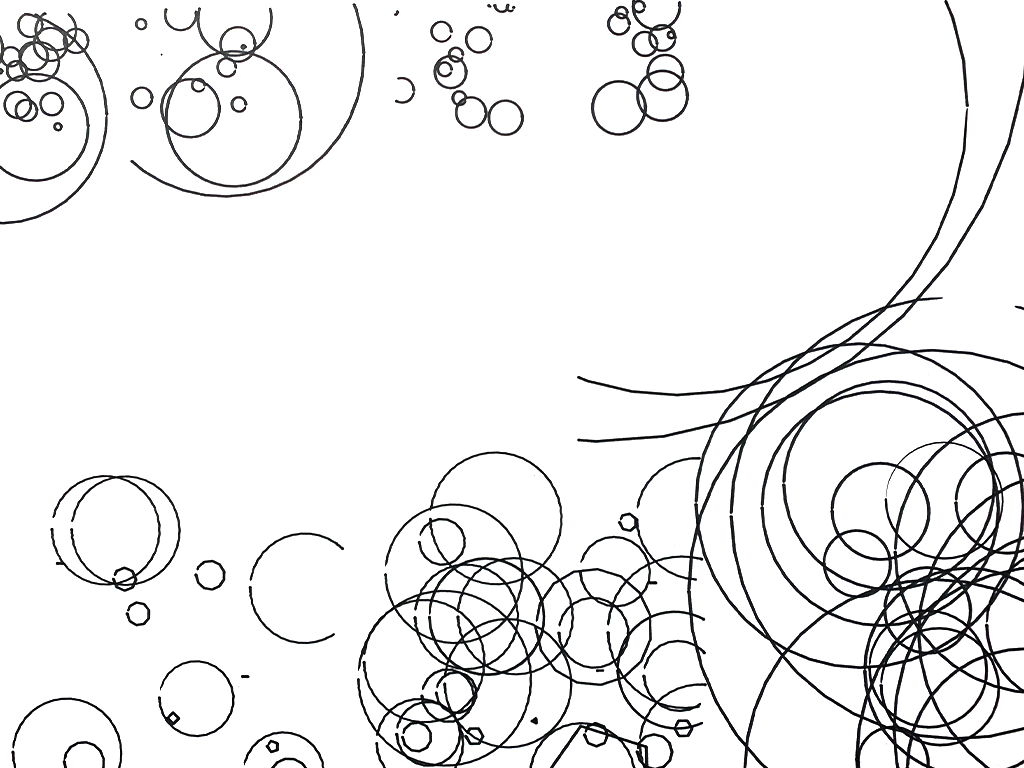

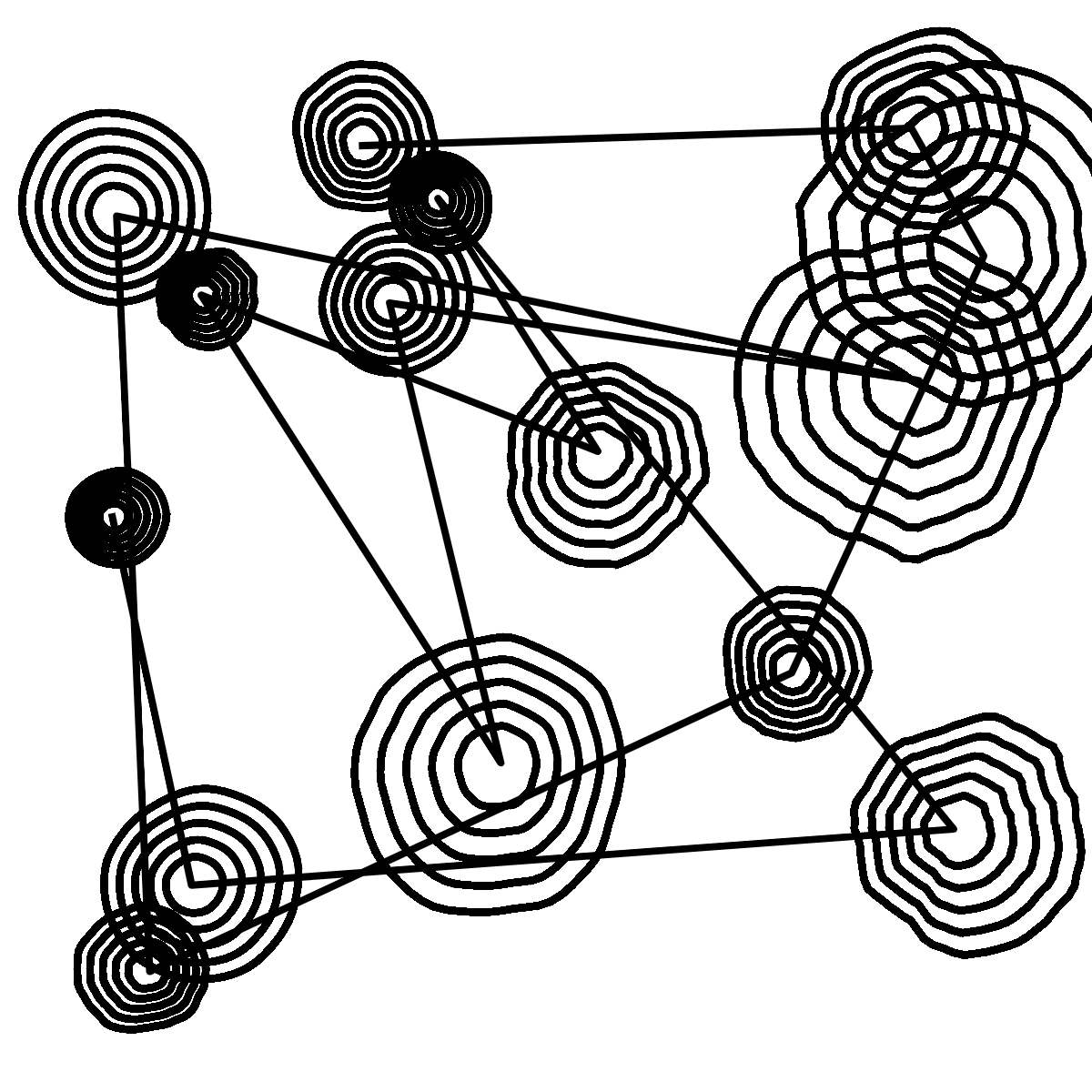

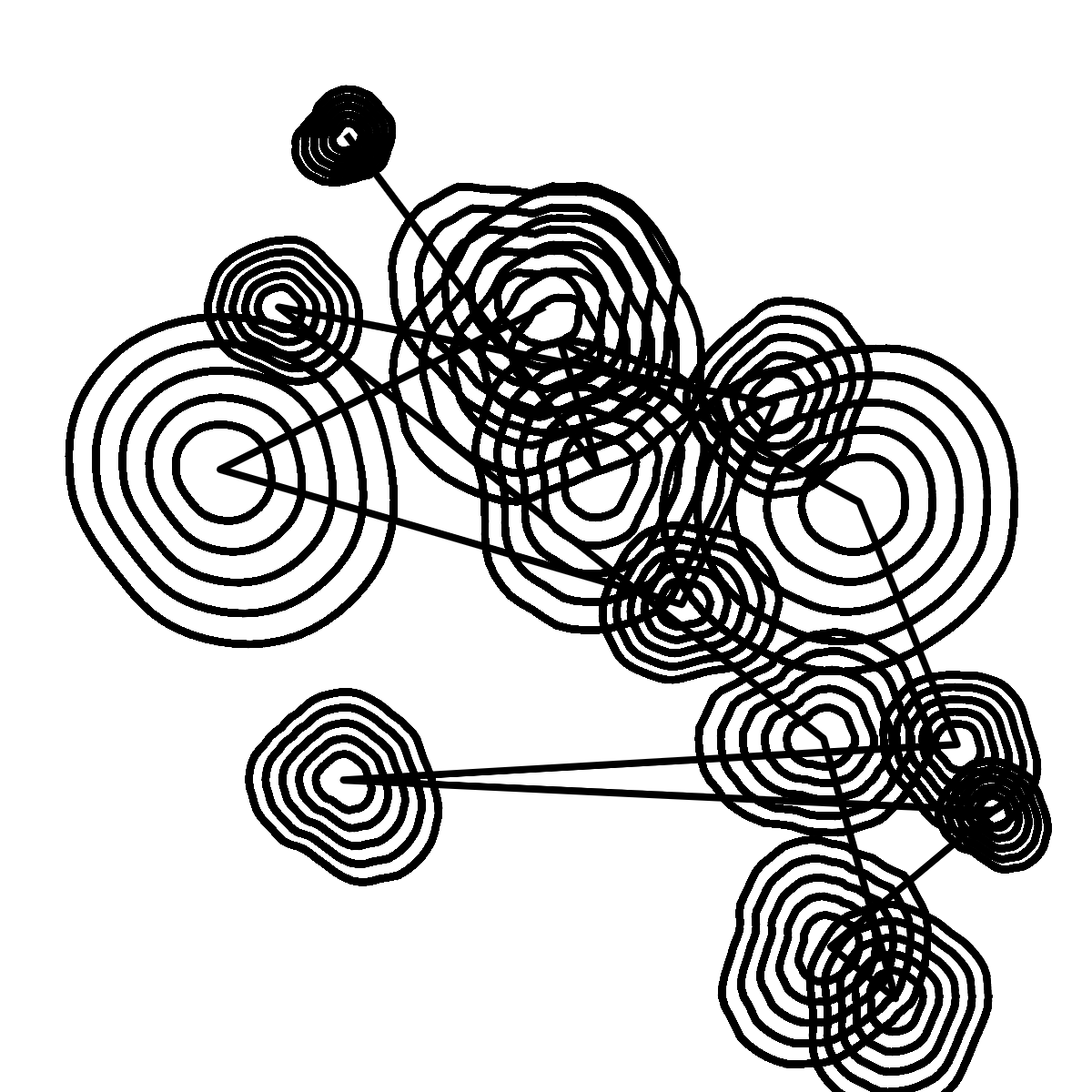

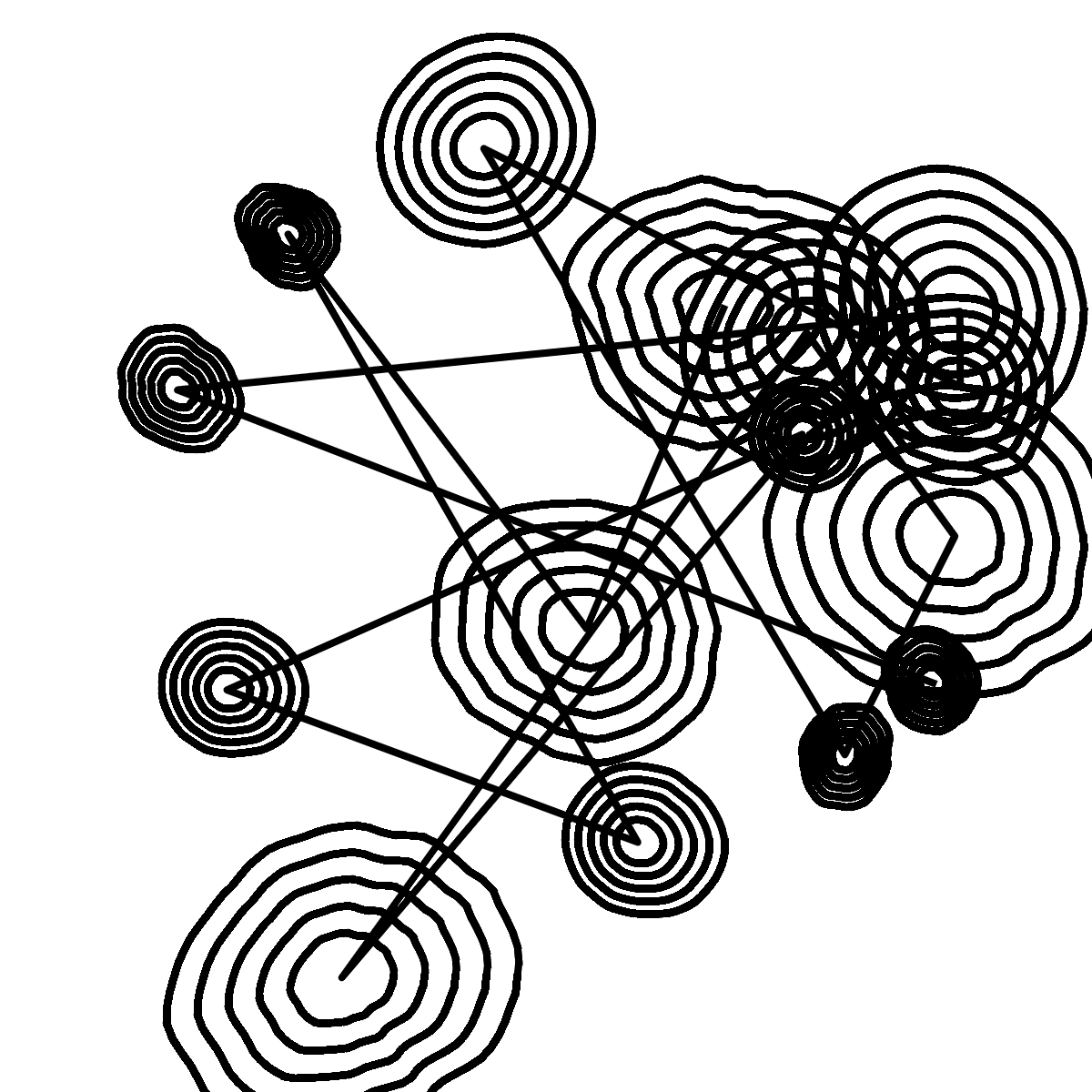

At first, for simplicity’s sake while I got the Axidraw up and running, I used circles as a visual language. The larger the capacitive input, the larger the radius of the circle. Initial experiments resulted in an outcome like so:
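For the circles, the core is a linear mapping from the sensor value to a radius, plus breaking the circle into short line segments the Axidraw can follow in interactive mode. A minimal sketch, assuming a raw input range of 0 to 2000 and radii in cm (both numbers are hypothetical; the real range depends on the sensor and the plant). `map_range` works like p5’s `map()` with clamping switched on, so a stray reading can’t produce a giant circle.

```python
import math


def map_range(value, in_min, in_max, out_min, out_max):
    """Linear re-mapping like p5's map(), clamped to the output range.

    in_min and in_max must differ.
    """
    t = (value - in_min) / (in_max - in_min)
    t = max(0.0, min(1.0, t))          # clamp so out-of-range readings stay in bounds
    return out_min + t * (out_max - out_min)


def circle_points(cx, cy, r, segments=36):
    """Approximate a circle as a closed polyline (first point repeated at the end)."""
    return [
        (cx + r * math.cos(2 * math.pi * i / segments),
         cy + r * math.sin(2 * math.pi * i / segments))
        for i in range(segments + 1)
    ]
```

For example, `r = map_range(reading, 0, 2000, 0.2, 1.5)` and then `circle_points(cx, cy, r)` gives the points to trace. Fewer segments draw faster but look more polygonal, which is a trade-off against the 3-second drawing time.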

This outcome contains drawings from 8 separate interactions: I just shifted the paper when required. I set the Axidraw’s actual drawing area to be very small so it wouldn’t waste time travelling to each target spot.

I was still bound by simplicity (to minimise the time the Axidraw took to draw each outcome), so I replaced the circles with ellipses, which are a visual callback to the shape of leaves. The input defined the X_radius, Y_radius, tilt and size.
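A hedged sketch of the ellipse version. The exact mapping I used isn’t reproduced here: `leaf_params` is a hypothetical example of how one reading could set the size, the length-to-width ratio and the tilt together (the 0 to 2000 input range and the cm values are assumptions). `ellipse_points` rotates a standard ellipse by the tilt angle about its centre and then moves it into place.

```python
import math


def ellipse_points(cx, cy, rx, ry, tilt_deg, segments=48):
    """Closed polyline for an ellipse with radii rx, ry, rotated by tilt_deg about its centre."""
    a = math.radians(tilt_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    pts = []
    for i in range(segments + 1):
        t = 2 * math.pi * i / segments
        x, y = rx * math.cos(t), ry * math.sin(t)      # point on the unrotated ellipse
        pts.append((cx + x * cos_a - y * sin_a,        # rotate by the tilt, then translate
                    cy + x * sin_a + y * cos_a))
    return pts


def leaf_params(reading, lo=0, hi=2000):
    """Hypothetical mapping from one reading to (rx, ry, tilt_deg), radii in cm.

    A stronger touch gives a bigger, longer, more tilted 'leaf'.
    """
    t = max(0.0, min(1.0, (reading - lo) / (hi - lo)))  # normalise and clamp to 0..1
    size = 0.3 + 1.2 * t          # overall size (the long radius)
    ratio = 1.5 + 1.5 * t         # length-to-width ratio
    tilt = 90 * t                 # degrees
    return size, size / ratio, tilt
```

Putting it together, `rx, ry, tilt = leaf_params(reading)` followed by `ellipse_points(cx, cy, rx, ry, tilt)` gives one leaf-like shape per batch. Driving all three parameters from one number keeps the drawing quick, but it also means they always change together.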

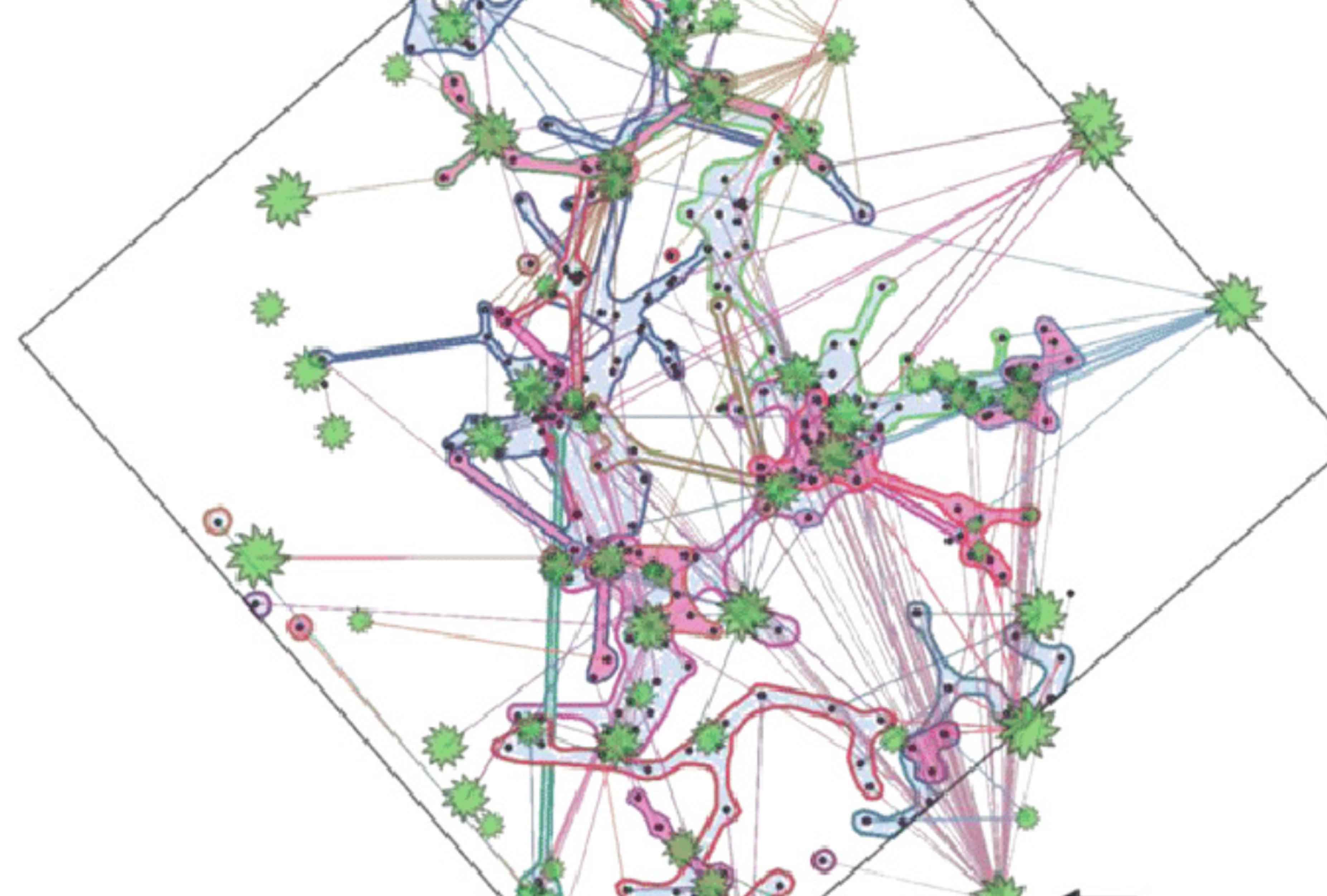

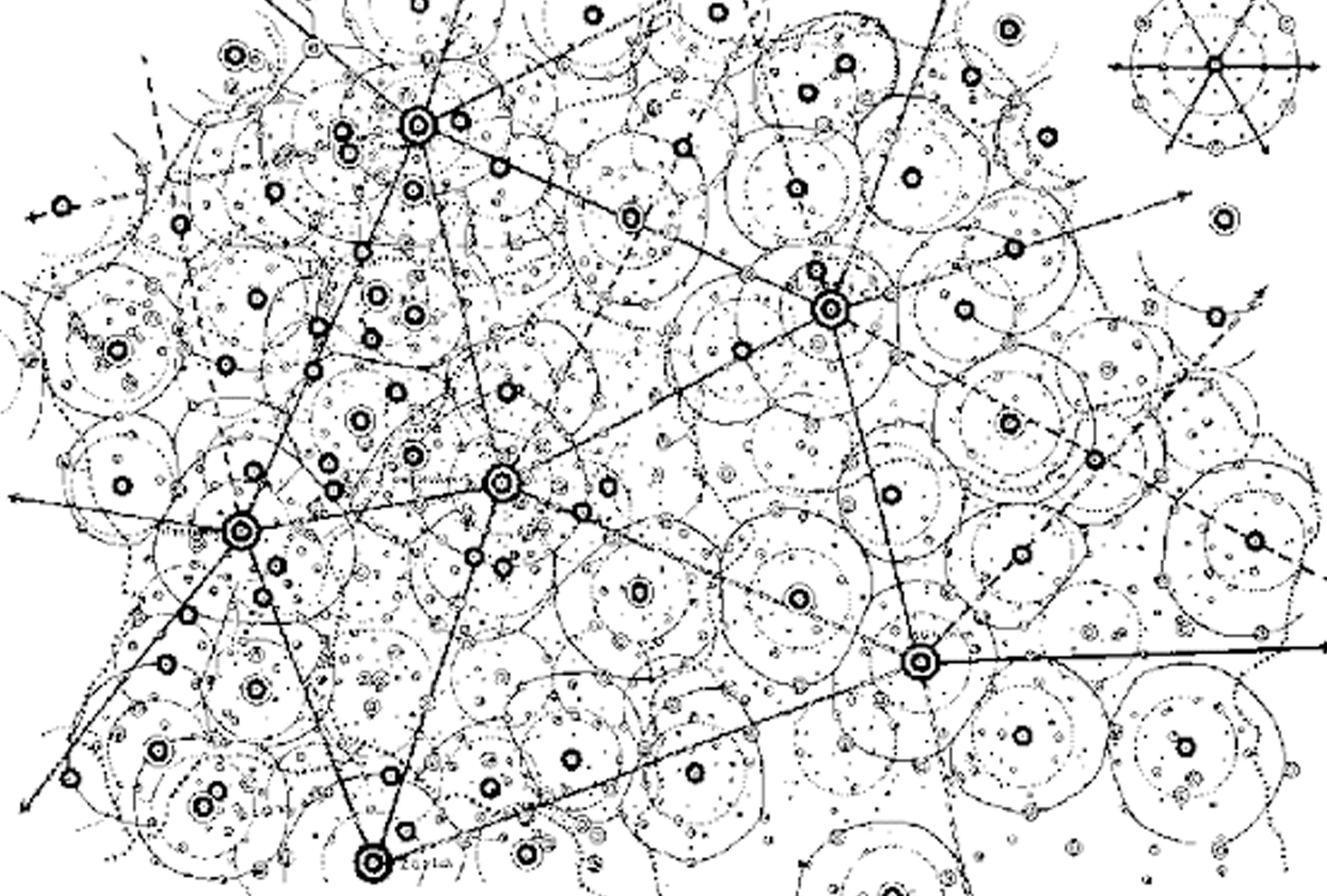

While this is a very simple visual language, I am interested in developing it further. Based on visual imagery of cell structures and the internal microscopic processes that transfer chemical indicators within plant structures, I am working on some p5 sketches that I can hopefully rewrite in Python rather than JavaScript and run through the Axidraw. Because my understanding of Python is super limited at the moment, I might just sort of set up or ‘simulate’ the Axidraw drawing these more complex shapes in response to plant touch.

Documentation

Setting this up was a huge relief. Actually using it, touching the plant and watching the Axidraw immediately move to draw a visual response, felt great: I was alone in the Cineleisure space but it was like I had company! The moving arms of the Axidraw seemed to belong to the plant, and the plant itself was the brain.

Now that this was set up, I had to formally document it. I borrowed Afiq’s camera, got my tripod and iPad, and set up the space like so:

I chose Posca markers as the pen because the ink was super opaque and the nib was thick, giving more volume to every visual outcome. Here’s my setup:

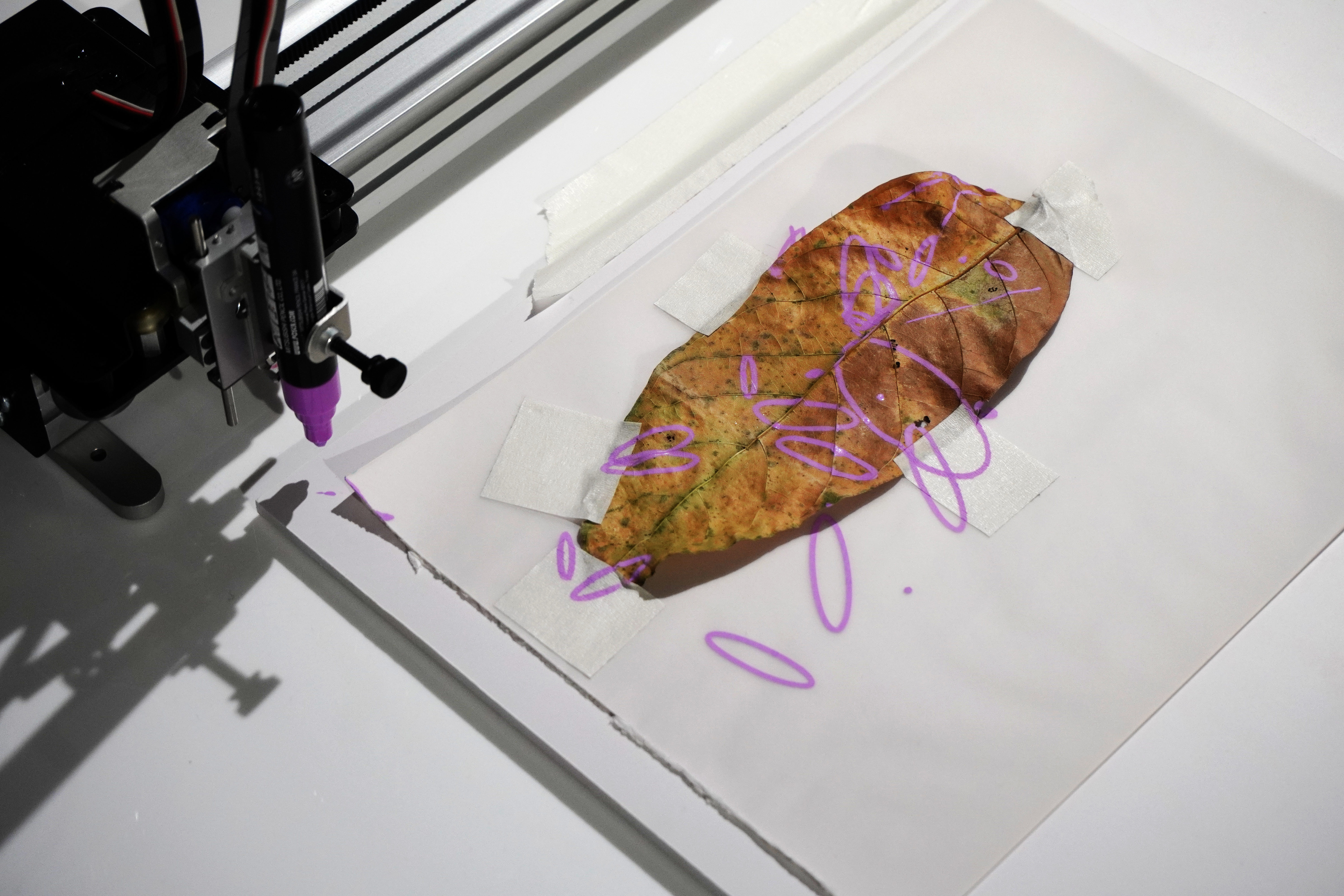

I also tried drawing on a leaf just out of curiosity, but unfortunately it didn’t really work out. Because the leaf isn’t flat, it kept catching on the pen.

I ended up with some clean pictures and videos of the prototype, so I can convey the process a little better! I decided to edit together a short 1-minute video demonstrating Human Plant Interaction. It sounded a bit sad and boring without music, so I added ‘04.24 p.m’, a track I sometimes listen to when I study or work.

Here's the video:

I think the captions at the moment are kind of cheesy, but they do the job, so I left them as they are.

Some User Testing

Later, I borrowed the Axidraw Andreas had at the McNally campus and set it up so my friends could briefly test it. My documentation of this was less than adequate: I didn’t clear the table before calling people over, nor did I ask for written feedback, which was a huge missed opportunity.

There were two approaches. Some people were super delicate with the plant and only touched it fleetingly; others really embraced the plant as an interface and squeezed my cactus! Poor thing.

Positive feedback: it was fascinating to see the Axidraw move in tandem with their interactions. Most kept interacting with the plant during the short gap when sensing stopped and the Axidraw drew, not realising that interactions during that period were not recorded. Some explored the nuances of interaction, such as touching the baby leaves and the stem, and enjoyed the variations in responses.

Negative feedback: the plant was not sensitive enough, so figuring out how to increase its sensing capability could be a goal. One person also commented on the unpredictability: occasionally, an interaction with an expected outcome (delicate touch: small ellipse) went astray and created a really large ellipse, though for the most part the system was reliable.
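One way I could tackle those stray large ellipses (an assumption, not something I’ve tested on the plant yet) is a running median: instead of drawing from each raw reading, draw from the median of the last few. A single spike barely moves the median, whereas it would drag an average along with it. `SpikeFilter` is a hypothetical name, and a window of 5 is an arbitrary starting point.

```python
from collections import deque
from statistics import median


class SpikeFilter:
    """Running median over the last `window` readings.

    One wild reading cannot shift the median of five values far,
    so a single glitch no longer turns a light touch into a huge ellipse.
    """

    def __init__(self, window=5):
        self.values = deque(maxlen=window)  # oldest reading drops out automatically

    def update(self, reading):
        """Add a reading and return the current median."""
        self.values.append(reading)
        return median(self.values)
```

Each new reading goes through `update()` before it reaches the mapping, e.g. `smoothed = f.update(raw)`. The cost is a small lag: a genuine squeeze has to last a couple of readings before the median catches up with it.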

Because of my shabby documentation, I plan to conduct this activity next semester again with a more formal process.

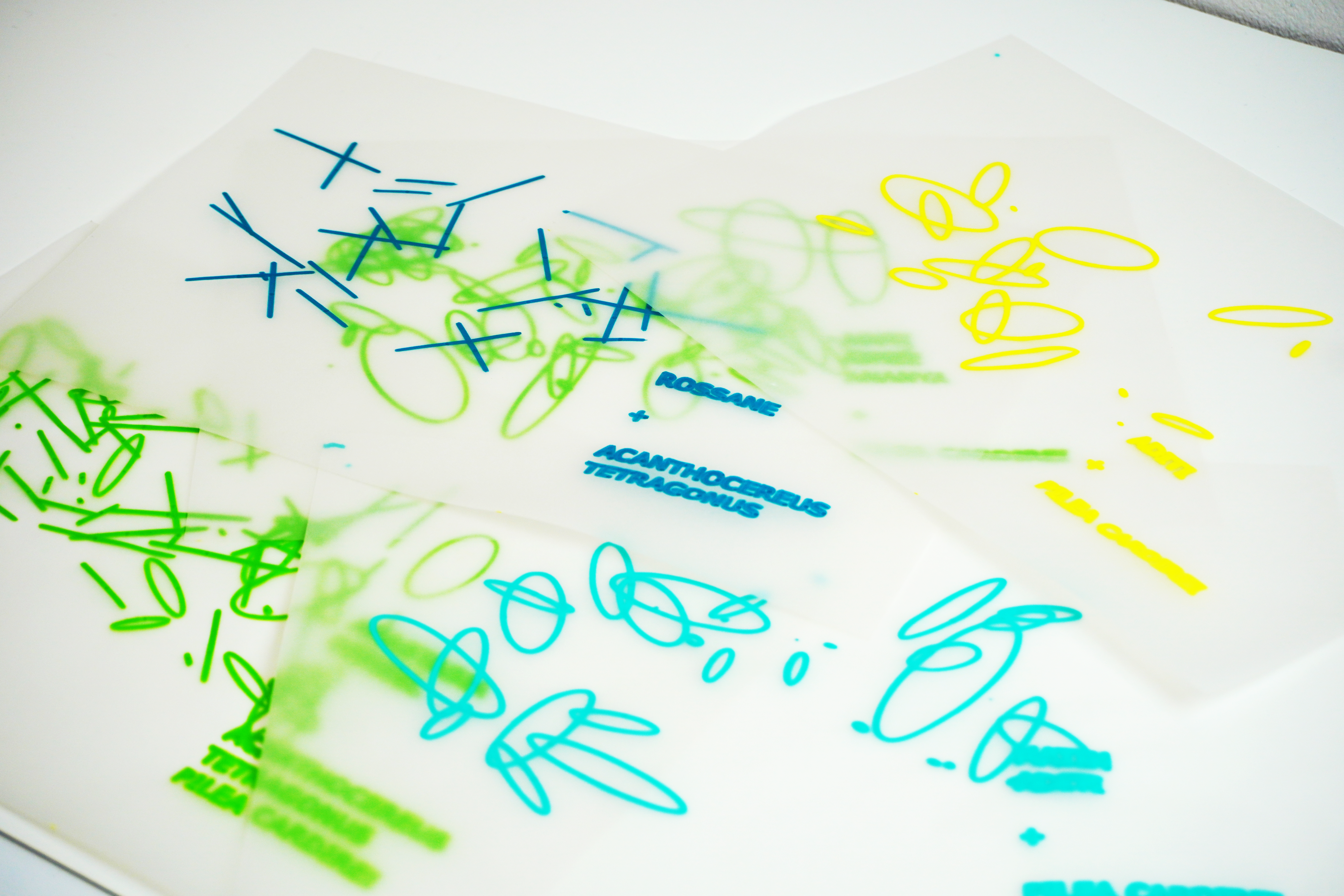

Rough Zine

I had a set of outcomes from this user testing session, so I decided to make the Axidraw write the names of the participating humans and plants and compiled everything into a mini zine. Very low fidelity, very rough, but the outcomes looked quite interesting together. The translucent paper was slightly tinted and crumpled, which added an organic quality to the zine. I called it ‘Plantangible’, get it?

Reflection

My final prototype came together at the very last minute, and I kept changing my mind and struggling to commit, but I am happy with the Axidraw one. It shows potential, and it demonstrates how different machines and inputs can come together to create a system, which is what ubiquitous computing is all about. Right now, not everything is seamless or unnoticeable, but it serves as a good starting point that I can develop further next semester.

Andreas is worried that I am going to come back after December with an entirely new idea, haha. I promise I am committing! There is a lot of space for various forms of exploration within this current prototype concept I have, like integrating sound, light and other visual media as actuators.

Semester 1 was all about exploring and understanding what a Living Media Interface entails, which meant dealing with plants on a more intimate basis and subjecting them to (mostly) non-invasive probing, wire-fitting and tactile interacting. I dug up plants, sowed new seeds and watched as baby leaves emerged while wilted leaves shrivelled up. I always had plants to take care of even before this project, but turning them into interfaces really cemented my attachment to them. I would see more active responses after I had tended to and watered them, and their leaves would feel healthier and firmer. When they drooped, I would feel a pang of guilt. This process of tangible interactions really made me care much more deeply for them, and I hope they care about me too (though they are my poor test subjects). If developing these Living Media Interfaces deepened my relationship with nature, I have faith that it will for others as well.

Some experiments were successful and opened up avenues for further exploration, while others led me to a dead end. I hit technical walls, dealt with some engineering-type problems that I could not solve, and got impatient because I exist on a different timescale from my beloved plants, who like to take their own time. Nature is also really unpredictable, but that’s where the interesting part comes in, through growth and decay.

I also learnt a whole bunch of new things and software, like Python, serial communication and physical computing in general! I used to look at software in isolation (for instance, a p5 sketch would stay in its own environment, the p5.js editor), but now I am used to cross-referencing things more. My HTML/CSS has also improved, but something still lacking is responsiveness and designing for mobile. (Praying that no one opens my Catalogue or CPJ on their phones)

I think something that held me back a little this semester is my own frustration. Term 1 started off well enough, but in term 2 when I started hitting technical roadblocks that I couldn’t solve I just kind of lost motivation. It’s okay, I have a month in December to get my mojo back.

Some things to get checked off my list this December:

Secure interviews with relevant botanical scientists and interaction designers so my research is accurate and well-founded

Continue experimenting with modes of input and output

Start developing a visual language as a means of representation of plant input data

Buy necessary sensors and components in Bangalore (where it’s cheap heheh)

Eliminate noise from the capacitive sensor